Vastrik

My home server setup changes every 3-4 years. In Siberia, I had a big old computer in the closet, in Lithuania I got by with ready-made NAS solutions from WD and Synology, then moved everything to a data center, and in recent years I've been running a Raspberry Pi cluster at home.

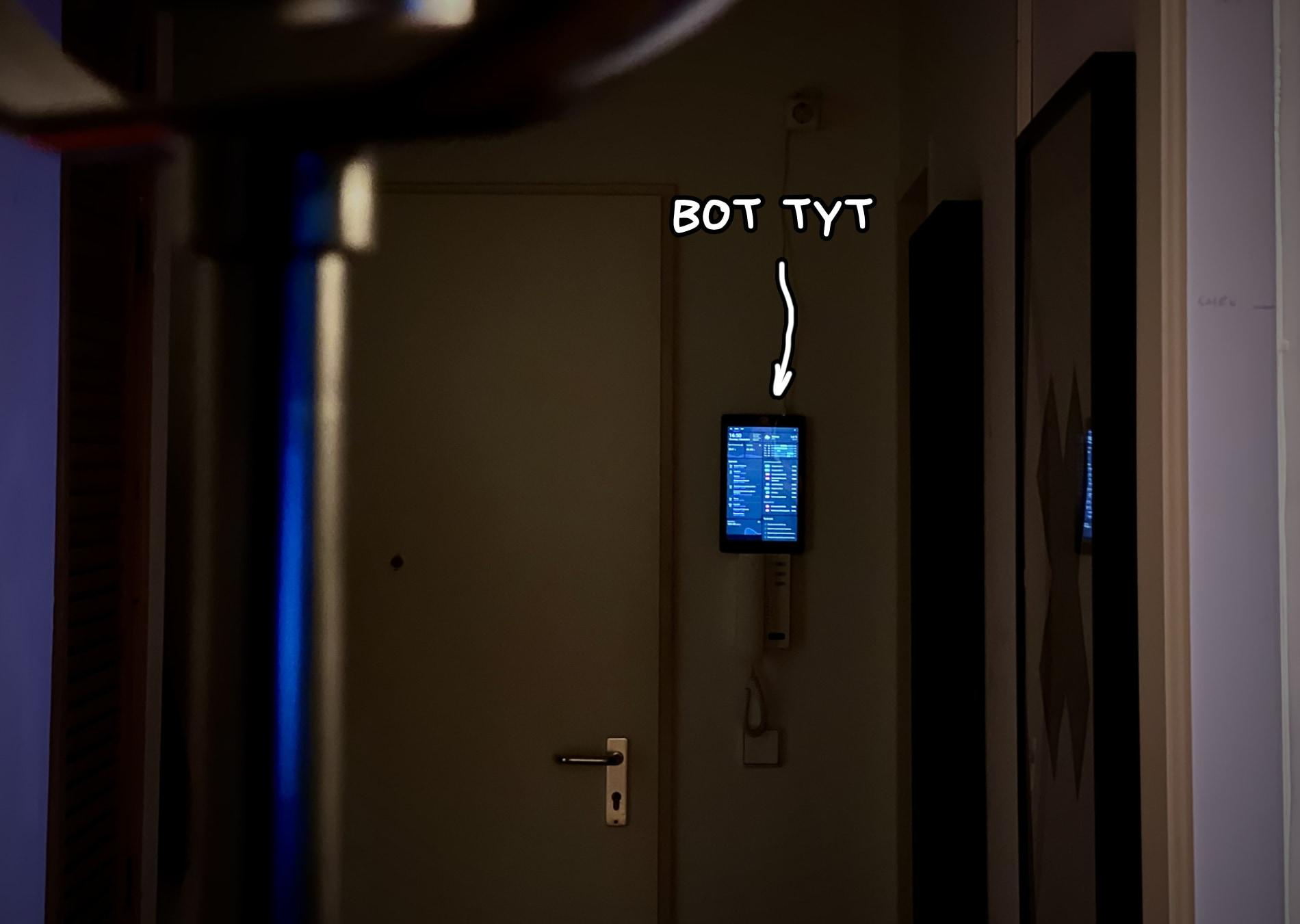

Recently I posted another photo of my wall-mounted tablet with a new widget, and lots of people asked me to explain how everything works.

Well, three years have passed, so it's time for an update.

This Black Friday I got myself a compooter. The last time words like SATA and DDR were part of my vocabulary was about five years ago; in recent years my "life" has happened exclusively on a laptop or tablet. I'm that bearded hipster from the Apple commercials.

But life without a real compooter is not a life at all. Although this "real" compooter could only partially be called that, because it looks like this:

This is a so-called Mini PC. Mini PCs used to be mostly the domain of school computer labs, but the bravest of them have since turned into the Steam Decks that every gamer drools over.

I have a fairly modest model — MinisForum U820 for €350, but I chose it knowing that I don't need gaming or external graphics cards, just an energy-efficient machine to replace my Raspberry Pis.

One of my RPis ran the smart home stuff with Home Assistant, the same setup I wrote about three years ago. Another served as my home cloud/backup/file server: a RAID1 of two 4TB HDDs holding periodic backups of all my projects and my tax calculator (yeah, I'm in Germany now), plus storage for all my photos, documents and other hard-earned stuff. These two HDDs have moved with me through three countries in a row.

And when I needed a third RPi, I said enough. Time to get something proper, install a full Ubuntu on it and set up a home lab like in the finest homes of Europe.

I've been diligently migrating my home services to this little beast over the last few weeks, so it's a perfect time to document how everything works for posterity.

It's been three years since that post. Almost an eternity. Back then, I was building a smart home in my new apartment and believed in a bright future. Now I don't believe in anything and I've practically moved out.

Spoiler alert — almost everything got thrown out over these three years.

☠️ The zoo of Chinese Xiaomi devices. As expected, these guys were the first to rot.

When they're new — you're in heaven. For five bucks you can monitor temperature and humidity in every room, and for another ten — automatically turn on the lights. Wow! Technology!

But after six months to a year, the temperature sensor's battery dies (and it's barely replaceable), and the motion sensor simply dies from dust, so you have to buy a new one and rewrite all the scripts.

While these might seem like minor issues at first glance, when everything falls apart for the fifth time — you just give up. I don't mind turning on lights manually anymore.

Yes, this was absolutely expected, and the Chinese junk from Xiaomi/Aqara was bought purely for experiments and jokes about crappy IoT. So no big loss.

I still occasionally find the corpses of old sensors around the apartment and smile.

☠️ Universal hubs, smart switches, and other "scenarios". Any scenario more complex than "press button, light turns on" is doomed to die eventually. Sooner or later you get tired of programming new lights and triggers, tweaking scenes, and generally fussing with all of it.

So all "smart switches" were ripped off the walls and replaced with dumb ones.

The biggest kek came from the battery-powered sensors that were supposed to "smartly" keep rooms at a comfortable temperature during the day, lower it at night, and save on heating when nobody's home.

Well. I got my heating bills for 2020, when the sensors were everywhere, and for 2021, after I removed them. With the "smart energy-saving sensors" I burned 20% more heating in a year than in the year with dumb knobs that just stay on position one.

Great savings!

In the end, I'm left with only a set of IKEA buttons and sockets that don't replace regular switches but rather complement them — for when I'm too lazy to get up from the couch before bed, or for floor lamps that I want to control remotely.

But nothing more than that. Even the balcony Christmas lights were replaced with regular ones with their own timer. No difference whatsoever.

☠️ Remote control. The feature "turn off lights at home while you're away" has been useful exactly ZERO times.

Yes, I'm impressed by how Apple HomeKit tunnels all my stuff through any NAT and lets me monitor my home from anywhere through an Apple TV sleeping somewhere under the TV. That's cool. But it has never actually been useful. Sorry, Tim Cook.

✅ IKEA Smart Home. In the second year, the entire zoo of dongles and protocols was replaced with a single hub — from IKEA. This was a conscious choice.

First, IKEA made the most reasonable "ecosystem" by reusing open standards (ZigBee) and even contributing to open source.

Second, IKEA built their entire smart home without any "cloud" at all. It's an honest mesh network that can work even completely offline. Unplug the hub — all your switches and lights will still continue to work. True peer-to-peer. Finally, one of the damn corporations did it right.

Yes, IKEA often gets criticized for lacking fancy features like those in Google Home. But that's precisely because implementing them requires dumping all your data into the cloud, and IKEA chose a different path. Spartan, but very commendable.

Just for this alone, respect to the Swedes, I'll happily watch their "smart" device lineup grow. Please don't give in and don't make IKEA Cloud. I'm begging you!

✅ Home Assistant. In the original post, I praised Home Assistant so much that I even said I follow their every changelog.

Such bright love usually fades quickly, but no. I still open and read their changelogs at least once every couple of months. Third year in a row!

However, over time Home Assistant stopped being a "universal hub" for me. I no longer control devices through it (although I could); instead, I use it as a dashboard where all the readings and information I need are collected and monitored.

Home Assistant has one of the coolest and simplest architectures for widgets and components I've seen. Yes, they broke it once, which even got nicknamed The Great Migration, but everything has become even more beautiful since then. I even use it as an example for colleagues.

So it's still alive and legit, although it's essentially turned into a backend for the wall tablet. But at least before leaving home I always know if I'll catch the next tram and whether I need to wear a hat! (yes, mom)

✅ Tablet. The wall-mounted tablet is still so useful it deserves a separate story below.

I've had a cheap Amazon Fire HD tablet hanging near my front door for a while now. It cost something like 70 euros and shows one of my configured Home Assistant dashboards 24/7 through the Fully Kiosk app.

And it's still the most useful thing in my entire dummy home. Even if I get bored and throw out all the smart devices, I'll keep the dashboard. I look at it several times a day and it actually saves time.

The tablet aggregates two types of information — "home" and "outside".

Home info lives in the left half. This includes:

On the right — everything "outside":

I had plans to add garbage collection schedules there too, but couldn't find the right APIs for Berlin.

No major technical issues. In three years nothing has burned out or broken down, the battery is still alive, and the electricity bills haven't bankrupted me. There's now a layer of dust as thick as a fingernail, but whatever: if it breaks, I'll buy a new one.

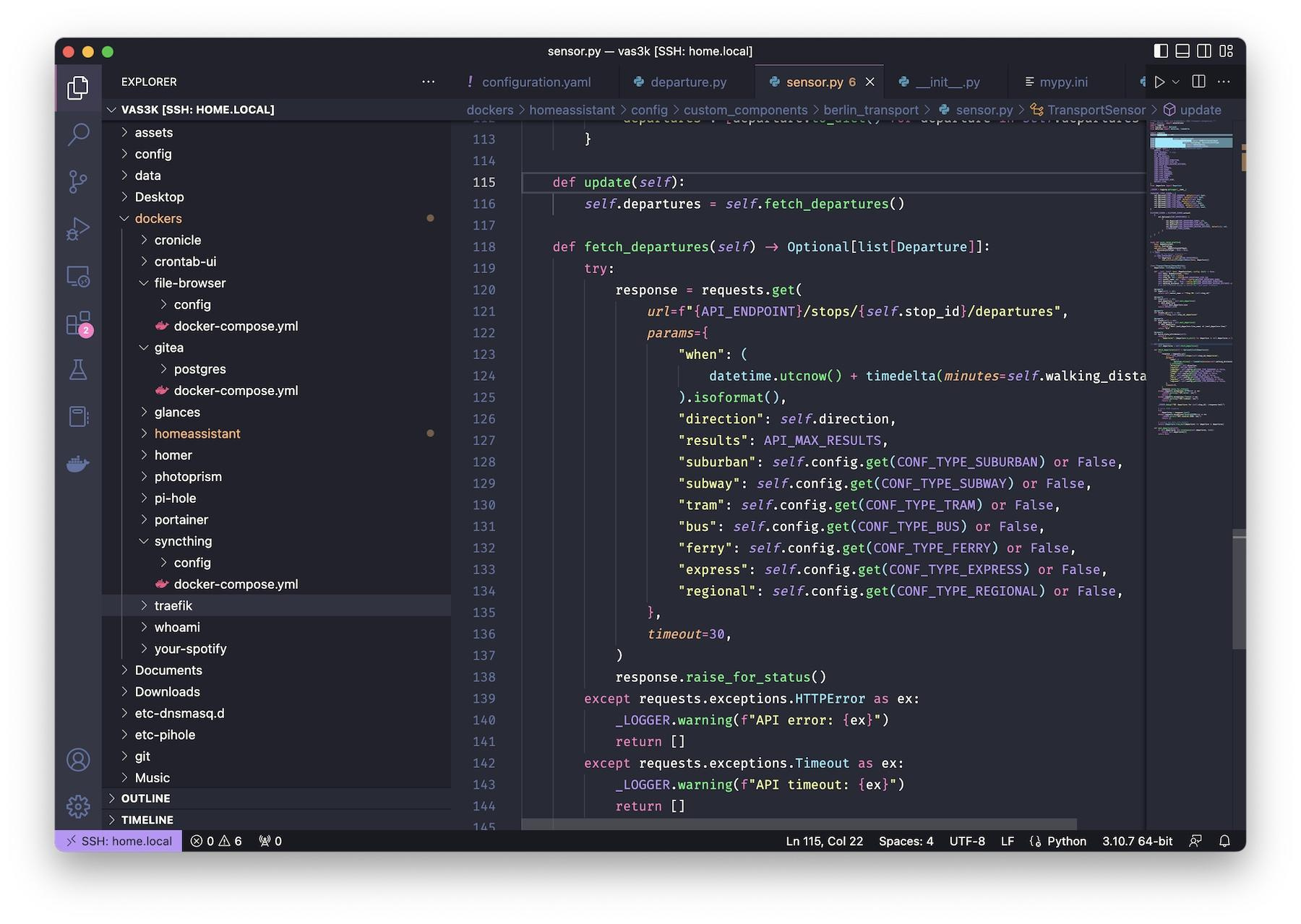

While moving all my stuff from Raspberry Pi, I noticed we've been missing a proper transport widget for a while. We used to have a tiny one that could only track transport from point A to point B. But now we need to see everything. So I decided to write a new one. Yes, again.

Here's the code: https://github.com/vas3k/home-assistant-berlin-transport

For the old-timers this is probably becoming a meme by now: vas3k builds his own transport tracker in any city, under any circumstances. Novosibirsk old-timers still remember NGTMap, my first "startup", which I sold to a "big corporation" for 500 dollars back then! I was very proud of myself and lived on that money for three months!

In recent years, I've started caring much more about my data and information security. Maybe it's techno-pessimistic Germany influencing me, maybe it's Berlin with its deep hacker culture, or maybe it's because Big Tech is currently rotting at a terrifying pace.

Good services that I've used for ten years are getting bloated with tons of unnecessary features, and their nice little apps are being rewritten into monstrously slow web crap. Product owners have arrived, damn it. "How likely are you to recommend our app to a friend?" I could kill them.

Others are actively building digital gulags and walled gardens where they either trade your data or leak it left and right.

Now there's a third pain: constant bans and blocks of everything. I'm afraid to check my email in the morning because there will be yet another letter from some GitHub, Spotify, or Hetzner saying they're "very sorry" but can't continue working with me, no explanation given.

So I started gradually leaning toward "carry everything with me" and self-hosting for everything I consider truly important.

Dropbox was the first giant to fall. It rotted so badly that it was mercilessly replaced first with Nextcloud, and then with Syncthing because it's simple and stable.

Gmail was killed second. But honestly, there's no point in self-hosting email in 2020+ (your IP will get instantly banned by all mail providers), so it was replaced with an independent paid email service, doesn't matter which one, with history backups in Nextcloud.

Next up is replacing 1Password with something like Bitwarden/Vaultwarden, but that's trickier: I'm stuck with 1Password because I share passwords with lots of people, and there's no way around the "cloud" for now.

So over time, I plan to self-host more and more stuff at home. Yes, it requires time and skills, yes, self-hosting has its own dangers (recently I almost dropped the RAID array on the floor), but for me, the benefits still outweigh the drawbacks.

Gradually getting everyone at home onto this too.

Plus it's a nice hobby — you can practice technologies that you rarely use at work.

This part is for geeks, where I explain how everything is built inside.

Operating system — Ubuntu. I'm too old for hardcore stuff, though I really loved Arch back in the day. Ubuntu is installed with a full desktop since the computer is connected to the TV and even has Steam installed, in case I want to play my favorite indie game with a PS4 controller.

Next up, Docker. Docker is the foundation of everything now. Don't know how we lived without it. Running an application without Docker nowadays feels like going outside naked.

Modern OSes still love getting cluttered with packages, dependencies, leftover caches, and configs. In my youth, I thought I was just stupid and didn't understand anything, but even after 10+ years, I still don't know what to do with all this.

Here are pip packages, there are aptitude packages, here are snaps, and here some install script brought PHP and NodeJS for itself. That's why Docker is simpler for me: deploy a container with one command — test it — remove it — system stays clean and fresh.

Docker is awesome, though it's probably time to move to Podman, of course.

So for any service I want to run on my server, I first google "name + docker-compose" and in 99% of cases, someone has already written a decent working config that will deploy with a single command — with all frontends, backends, databases, monitoring, etc.

Sure, I could go hardcore with Kubernetes and Helm charts like true home-labbers, but I don't see much point in orchestration when you only have one machine.

The simpler you make it — the longer it lives

When you have lots of containers with services, like 10+, problems start. You have to remember ports for each one, figure out where to store all configs, authentication — it all quickly gets tangled like cables in a drawer.
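To make the "remember ports for each one" problem concrete, here is a minimal sketch, assuming you keep a hand-written inventory of which host ports each container publishes. The service names come from this post, but the port numbers and the `find_port_collisions` helper are illustrative, not taken from any real setup:

```python
from collections import defaultdict


def find_port_collisions(services: dict[str, list[int]]) -> dict[int, list[str]]:
    """Return every host port that more than one service tries to publish."""
    owners: dict[int, list[str]] = defaultdict(list)
    for name, ports in services.items():
        for port in ports:
            owners[port].append(name)
    # Keep only the ports claimed by two or more services
    return {port: names for port, names in owners.items() if len(names) > 1}


# Hypothetical inventory: service name -> host ports it publishes
services = {
    "syncthing": [8384],
    "homeassistant": [8123],
    "portainer": [9000],
    "gitea": [3000, 2222],
    "your-spotify": [3000],
}
print(find_port_collisions(services))
```

With a dozen containers this bookkeeping is exactly what a reverse proxy lets you stop doing: services stay on their internal ports and are reached by hostname instead.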

The simple way out is a ready-made platform like Umbrel, which abstracts all this away and gives you its own kind of App Store. That works for most users.

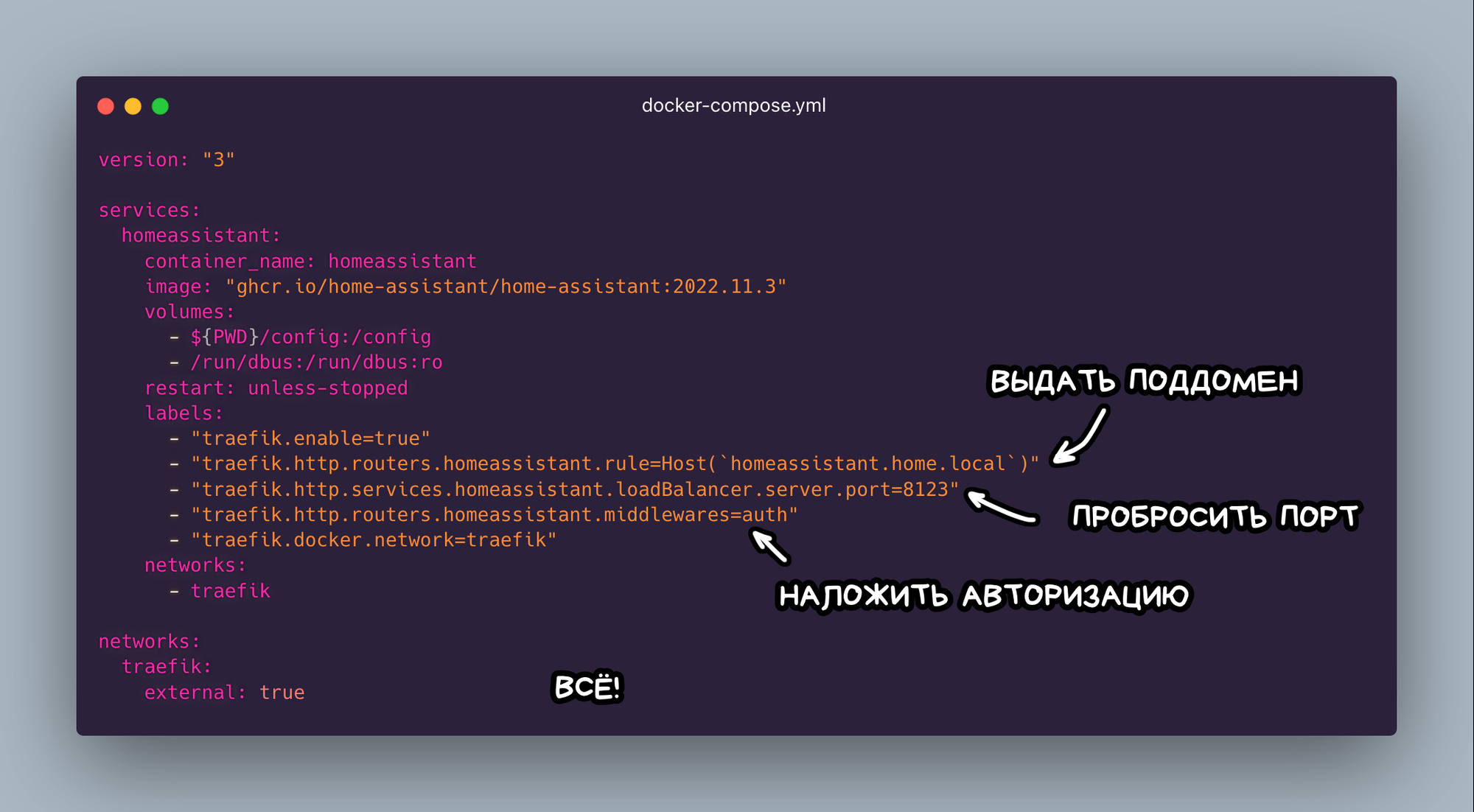

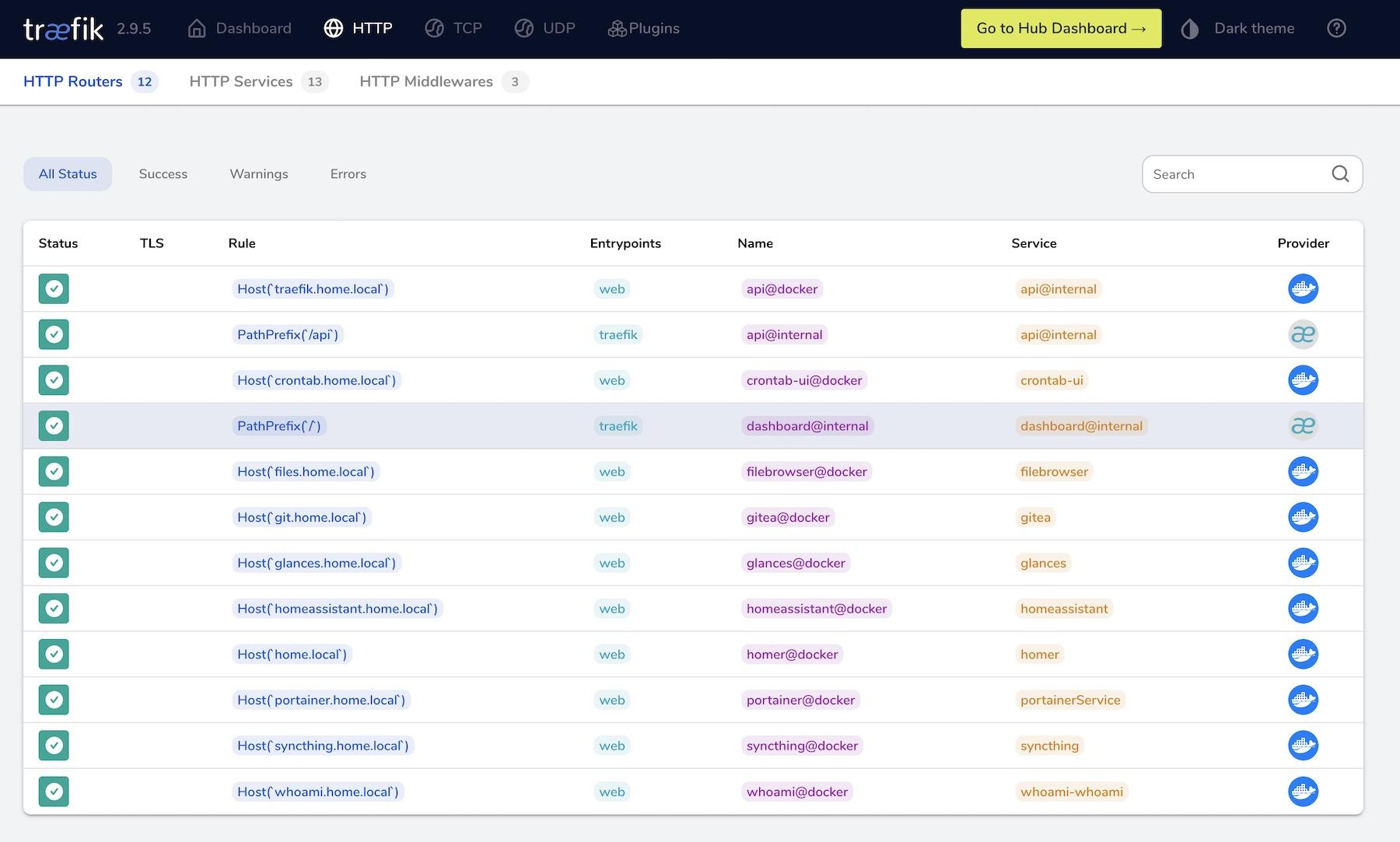

But if you plan to run many custom Docker containers — ready solutions can be a pain. Here comes good old Traefik, popular in Go circles, which nicely brings all this wealth together.

Traefik is a reverse proxy like Nginx, but written in this decade. It's already optimized for working with Docker by default, and also provides SSL, subnets, authentication, and many other useful plugins out of the box, which previously required long dances in Nginx and Let's Encrypt configs.

In the simplest case, to deploy a container on a new subdomain, you just need to add a couple of "labels" to your Docker Compose for Traefik to read and do all the magic automatically. You don't even need to restart it, everything is discovered automatically.
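Here is a sketch of what those "couple of labels" typically look like, generated from Python so the pattern is easy to see. It assumes Traefik's Docker provider is enabled and that the router and service are simply named after the container; the `traefik_labels` helper, the `home.local` domain default, and port 3000 are illustrative choices. In a real setup, these key/value pairs go under the service's `labels:` key in `docker-compose.yml`:

```python
def traefik_labels(service: str, port: int, domain: str = "home.local") -> dict[str, str]:
    """Build the Docker labels Traefik reads to route <service>.<domain> to a container."""
    router = f"traefik.http.routers.{service}"
    return {
        # Opt the container in (required when exposedByDefault is false)
        "traefik.enable": "true",
        # Match requests whose Host header is <service>.<domain>
        f"{router}.rule": f"Host(`{service}.{domain}`)",
        # Forward matched requests to this port inside the container
        f"traefik.http.services.{service}.loadbalancer.server.port": str(port),
    }


for key, value in traefik_labels("gitea", 3000).items():
    print(f"{key}={value}")
```

Because Traefik watches the Docker socket, a container started with labels like these gets picked up without restarting the proxy.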

My home network isn't accessible from the internet, so I've skipped SSL for now. But that's bad, I'll definitely add it later.

Each service lives on its own local subdomain. home.local is the main page for everything (dashboard with links), followed by syncthing.home.local, homeassistant.home.local, gitea.home.local, stats.home.local, and so on.

Where do local domains come from? Good old avahi-daemon announces them all through Multicast DNS to your home network automatically. You don't even need to set up your own DNS server to have nice URLs. Works for guests too (if they don't have prehistoric devices).

On Ubuntu, it's literally included out of the box. Just open [your_hostname].local in your browser and behold. If not — just do sudo apt install avahi-daemon and it magically appears.

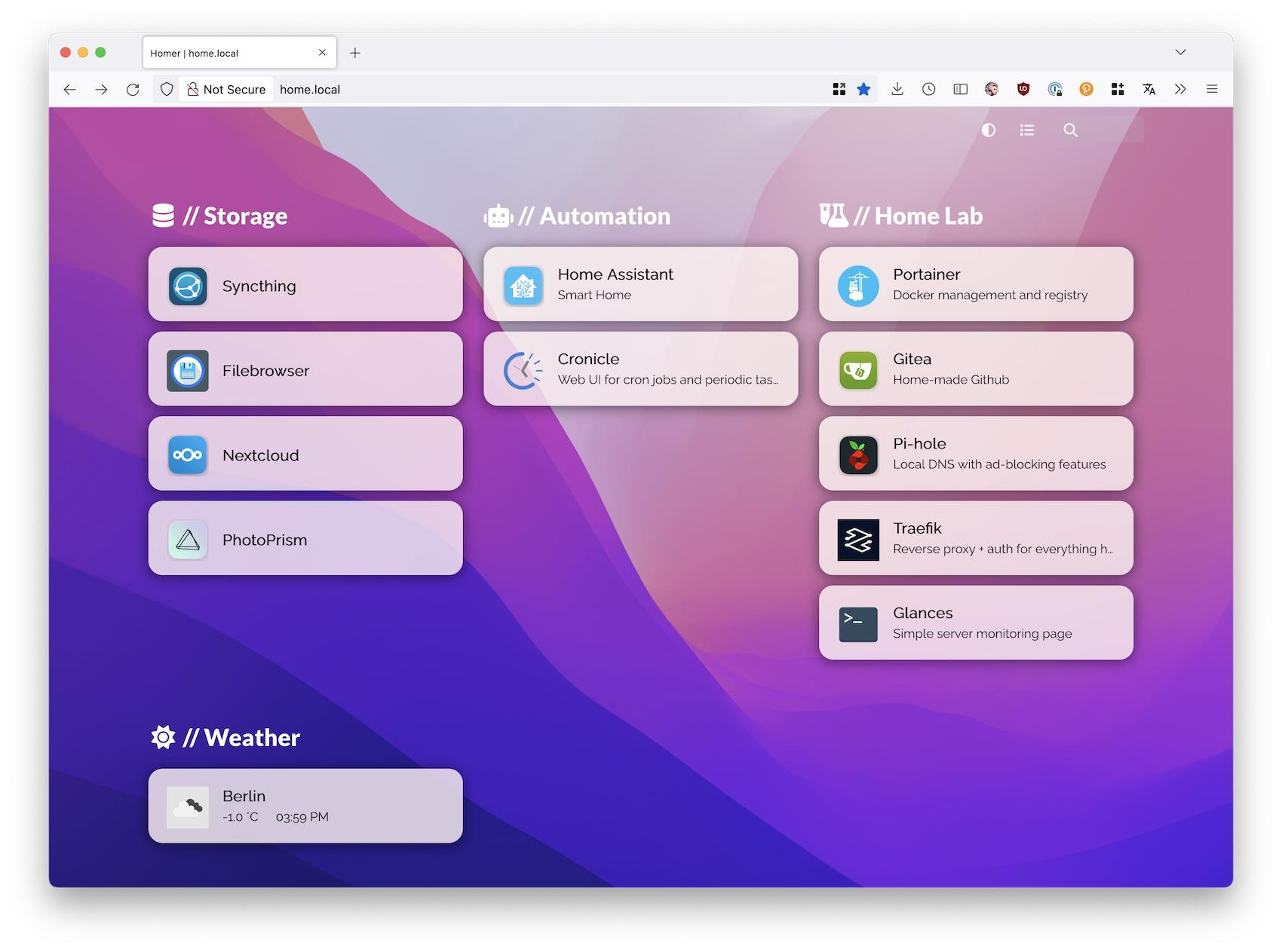

Next comes the choice of dashboard. You could keep all these links in bookmarks, but I prefer when I go to home.local and immediately click on the service I need. Traefik can even pass unified authentication headers to all services, so you don't have to enter login-password each time.

There are tons of dashboards on GitHub; I chose Homer with the Homer v2 theme. It also deploys through docker-compose and becomes just another service. Everything's uniform, I like it.

Now you can deploy any services from the Awesome Selfhosted list and play like a kid with building blocks. My set is minimal for now:

Syncthing. One of my favorite applications ever. It's indestructible, does one thing and does it well for many years now — syncs files directly between machines without the cloud.

Filebrowser. Yes, that's literally its name. A lightweight file browser, helps when you need to "find your old contract in the document archive" or something like that. Can barely do anything else, but that's not needed.

Nextcloud. All-in-one combo — file storage, calendar, chat, it can even fetch your email. Used it actively before, but now I'm a bit tired of its monstrosity and prefer simpler solutions. However, Nextcloud's main advantage is still its mobile app — it's convenient for both viewing stuff on the server and backing up photos from your phone.

Home Assistant. Installed via Docker, so it's slightly limited in features: for example, it can't install various internal apps, like the SSH terminal or the HomeKit hub. But I actually prefer it this way, and I can install all that myself if needed.

Cronicle. I have lots of periodic cron tasks that someone needs to execute and monitor so they don't fail. Backups of all my sites, scripts grouping receipts into folders for taxes, and more. The main problem with regular cron tasks is they suddenly break and you only find out a month later.
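The core idea behind catching "it broke a month ago" is a staleness check: every job records when it last succeeded, and something compares that against the job's expected interval. Cronicle handles this for you; the sketch below is not Cronicle's API, just a minimal illustration assuming you already have last-success timestamps from somewhere (for example, each job touching a file on success). The job names and the one-hour grace period are hypothetical:

```python
from datetime import datetime, timedelta


def overdue_jobs(
    last_success: dict[str, datetime],
    intervals: dict[str, timedelta],
    now: datetime,
    grace: timedelta = timedelta(hours=1),
) -> list[str]:
    """Return jobs whose last successful run is older than interval + grace."""
    late = []
    for job, interval in intervals.items():
        last = last_success.get(job)
        # A job that has never succeeded is treated as overdue too
        if last is None or now - last > interval + grace:
            late.append(job)
    return sorted(late)


now = datetime(2024, 1, 10, 12, 0)
last_success = {
    "backup-sites": datetime(2024, 1, 10, 3, 0),   # ran last night
    "tax-receipts": datetime(2023, 12, 1, 9, 0),   # silently stopped weeks ago
}
intervals = {
    "backup-sites": timedelta(days=1),
    "tax-receipts": timedelta(weeks=1),
    "photo-export": timedelta(days=30),            # never recorded a success
}
print(overdue_jobs(last_success, intervals, now))
```

The grace period avoids false alarms when a daily job simply runs a few minutes later than yesterday.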

Portainer. For me, it's just a convenient web admin for all Docker containers. Check logs, see which containers are running, where caches need cleaning, etc.

Gitea. Local GitHub, all my repos are mirrored there. Why? Well, when real GitHub finally bans me because I'm Russian Ivan (like they do with Iranians, for example) — then you'll understand why.

PhotoPrism. Your own Google/Apple Photos. They've even sprinkled some neural networks on it and it can find photos by simple queries like "car" or "budapest". I have a ritual — every Christmas I download all photos from the year from clouds in high-res, because we've all heard stories of how Google just compressed your photos into 10 levels of jpeg artifacts over time to save space. Now they're also automatically indexed by PhotoPrism and you can view them later with friends through the phone app.

Your Spotify. Your own Last.fm. Well, almost. Simple app that saves all your Spotify listening history and can show statistics like Spotify Wrapped but not once a year, rather once a month or week if you want. Pure entertainment. Plus local music history is always useful. Ah, made me miss Last.fm.

ArchiveBox. Your own Internet Archive (aka Wayback Machine). I haven't gone crazy about "archiving everything I've watched and read" yet, but there are enthusiasts in the community. Works like this: you install a plugin in your Firefox and it automatically saves EVERYTHING you browse on the internet to your server. Even if content was deleted (which happens often now) — you can always read it from your personal archive. HDDs are cheap anyway.

Pi-hole. Ad blocker and local DNS resolver. Haven't used it in a while, installed out of habit, but just in case!

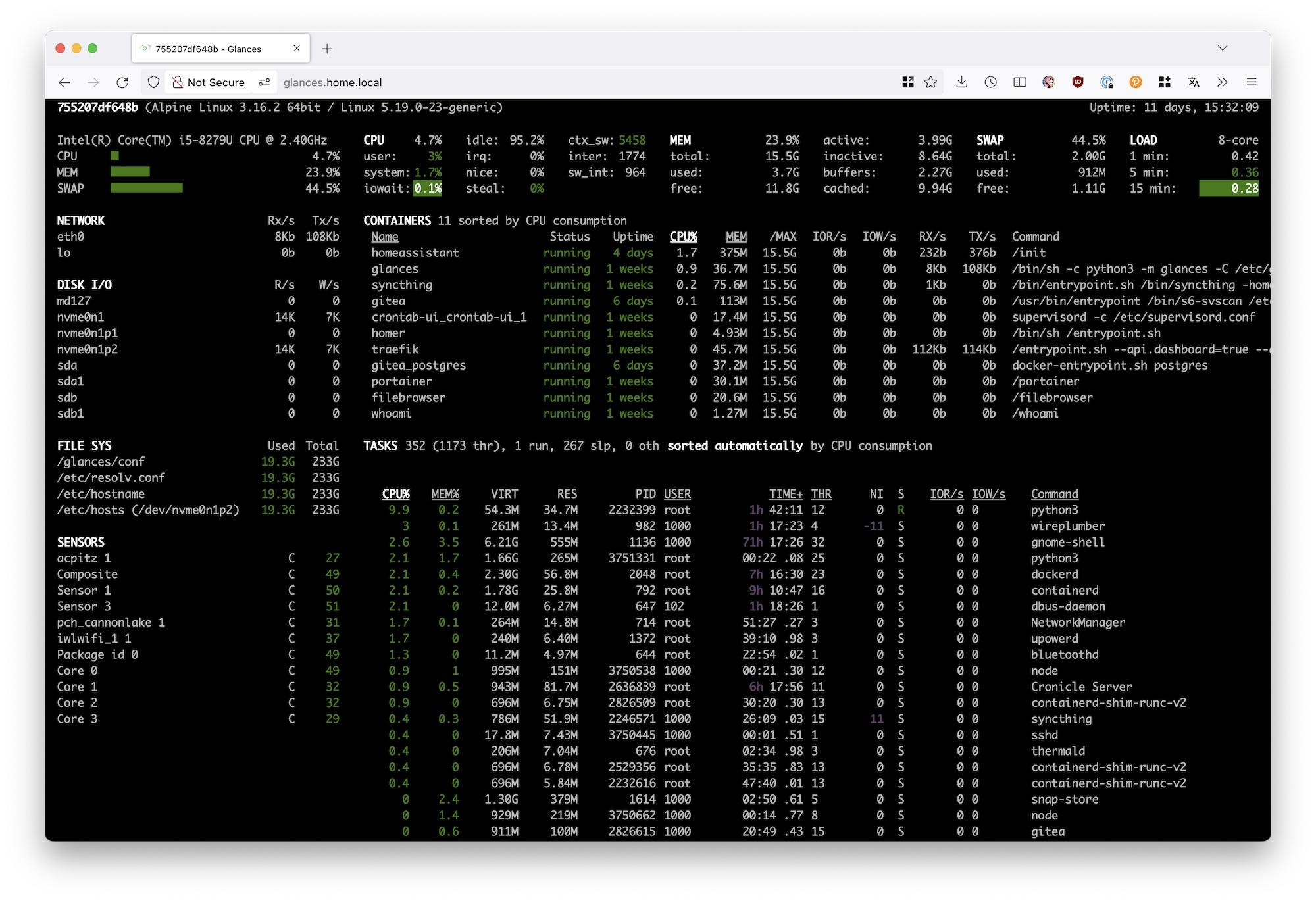

Glances. A dead-simple, convenient server monitoring page. Basically htop + docker ps + df -h plus a couple of other standard commands, all displayed in one place. I like it. It even changed my mind about installing the standard Prometheus + Grafana stack, since this is enough. Though I might still install it just for the statistics.
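To show how little the "df -h" part of such a page actually needs, here is a standard-library sketch of a one-line disk summary. It is not how Glances is implemented; `human_size` and `disk_report` are hypothetical helpers, and the units follow df -h's powers-of-1024 convention:

```python
import shutil


def human_size(num_bytes: float) -> str:
    """Format a byte count with binary (1024-based) units, roughly like df -h."""
    for unit in ("B", "K", "M", "G", "T"):
        if num_bytes < 1024:
            return f"{num_bytes:.1f}{unit}"
        num_bytes /= 1024
    return f"{num_bytes:.1f}P"


def disk_report(path: str = "/") -> str:
    """Summarize used/total/free space for the filesystem containing path."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    percent = 100 * usage.used / usage.total
    return (
        f"{path}: {human_size(usage.used)} used of {human_size(usage.total)} "
        f"({percent:.0f}%), {human_size(usage.free)} free"
    )


print(disk_report("/"))
```

Running it against each mount point (for example, the data and backup disks) gives a rough text version of the disk panel.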

🏴‍☠️ My list doesn't include popular services like Plex, Transmission and Jellyfin, which are awesome for building your own Netflix with torrents. Again, that's because of Germany, where torrents are effectively banned. But I highly recommend them to you! Take revenge for my pain.

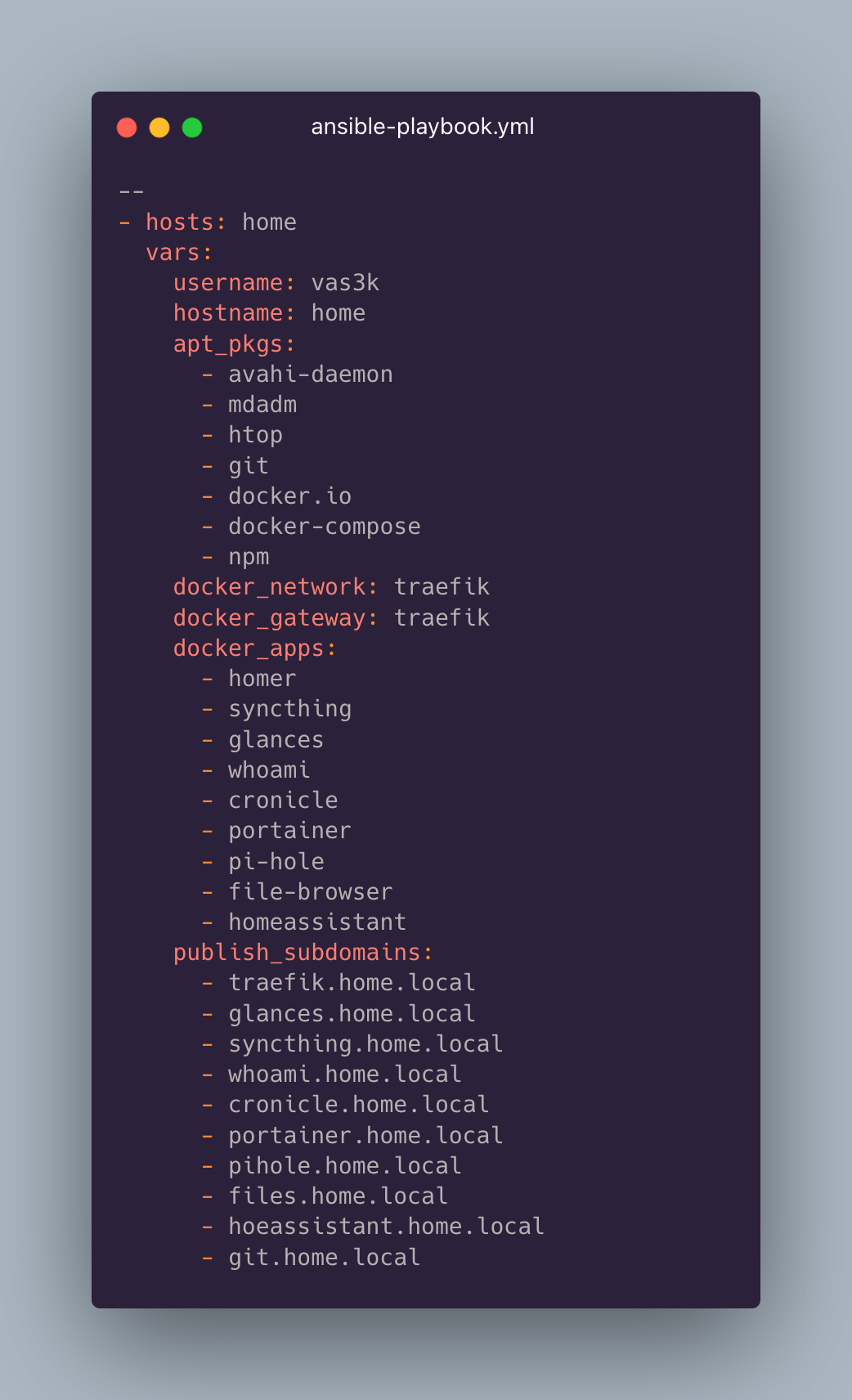

The last question is how all this is stored and configured. For that, I have a local git repo with all the configs and good old Ansible. Yes-yes, it's still alive. They've even finally ported it off Python 2. Only took them ten years.

Ansible is used to deploy my entire setup from scratch. I don't set up new servers that often and each time I have to remember "what else needed to be installed." Now it's all just written in a playbook, at the end of which Ansible simply copies the entire repo to the host and does docker-compose up -d for each service. Beautiful.
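The last step of such a playbook, "for each service, run docker-compose up -d", can be sketched in plain Python. This is not Ansible; it assumes a hypothetical repo layout with one top-level directory per service, each holding its own `docker-compose.yml` or `compose.yaml`, and it uses the newer `docker compose` plugin syntax (older installs use the standalone `docker-compose` binary instead). With `dry_run=True` it only lists what it would start:

```python
import subprocess
from pathlib import Path

COMPOSE_FILES = ("docker-compose.yml", "compose.yaml")


def service_dirs(repo: Path) -> list[Path]:
    """Return every top-level directory in the repo that contains a compose file."""
    return sorted(
        d
        for d in repo.iterdir()
        if d.is_dir() and any((d / name).is_file() for name in COMPOSE_FILES)
    )


def compose_up_all(repo: Path, dry_run: bool = True) -> list[Path]:
    """Start every service in the repo; returns the directories it handled."""
    started = []
    for d in service_dirs(repo):
        if not dry_run:
            # Equivalent to `cd <dir> && docker compose up -d`
            subprocess.run(["docker", "compose", "up", "-d"], cwd=d, check=True)
        started.append(d)
    return started
```

Directories without a compose file (notes, scripts, shared configs) are skipped, so the repo can hold more than just services.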

Just remember to commit config changes to git from time to time. If you change something through a UI, future generations won't know about it.

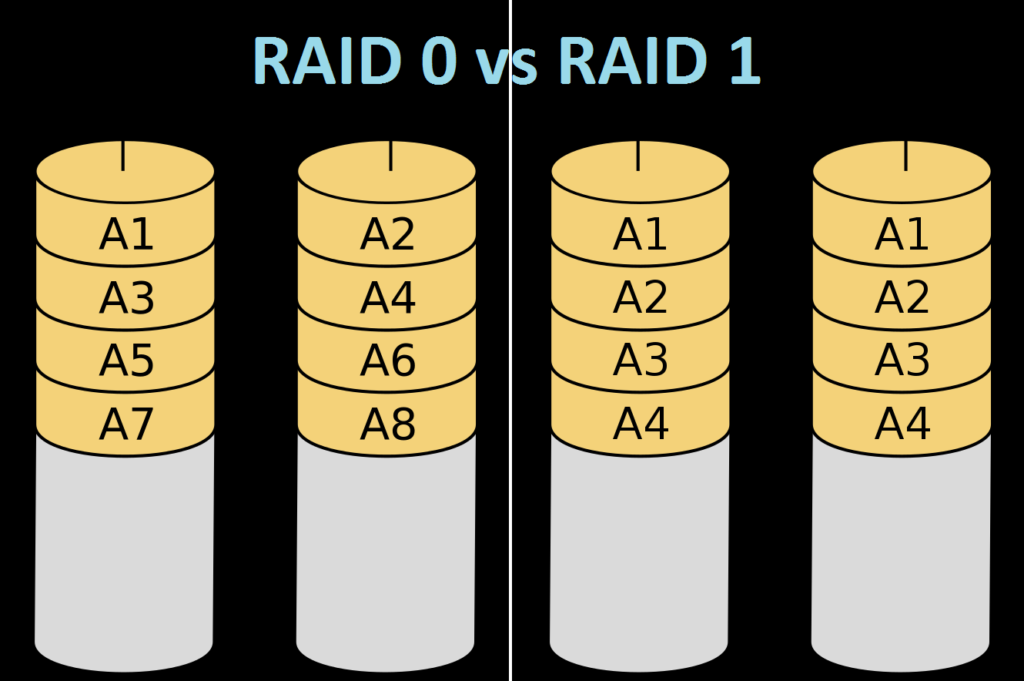

Back in my childhood, storage wasn't an issue. There wasn't much data, and Linux systems got reinstalled every couple of months. Over the years, or in my case decades, you start noticing how fragile HDDs are, even the special WD Red drives made for NAS.

One morning you wake up, pour yourself coffee, and with your left ear hear a crackling sound from under the TV — one of your HDDs died.

You go and buy twice as many disks and set up RAID1 on them. Or, if you already have lots of disks of different sizes, you set up ZFS, since it's trendy. And if you're broke, you just set up cron+rsync of all important folders to another disk, the old-fashioned way.

Each approach has its pros and cons. Choose for yourself.

Linux has beautifully simple mdadm, allowing you to literally build a software RAID array in two commands, and rebuild it in case of degradation.
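For reference, creating a two-disk mirror usually looks like `mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sdX1 /dev/sdY1` (device names here are placeholders). The scary part is noticing degradation in time. The kernel reports array state in `/proc/mdstat`, where a healthy mirror shows `[2/2] [UU]` and a degraded one shows something like `[2/1] [U_]`. Here is a minimal parser sketch, assuming arrays are named `mdN`:

```python
import re

ARRAY_RE = re.compile(r"^(md\d+) : ")
STATUS_RE = re.compile(r"\[(\d+)/(\d+)\] \[([U_]+)\]")


def degraded_arrays(mdstat: str) -> list[str]:
    """Return names of arrays whose member map contains a missing disk ('_')."""
    degraded = []
    current = None
    for line in mdstat.splitlines():
        header = ARRAY_RE.match(line)
        if header:
            current = header.group(1)
            continue
        status = STATUS_RE.search(line)
        if current and status:
            # [total/active] [U_]: each "_" is a member that is missing or failed
            if "_" in status.group(3):
                degraded.append(current)
            current = None
    return degraded
```

On the server itself you would feed it `open("/proc/mdstat").read()` from a cron job and alert on a non-empty result; mdadm also ships its own `--monitor` mode that can do the alerting for you.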

Mdadm lets you juggle disks with ease, though it's always scary, of course. So now I decided to try a slightly more old-school method: rsync all data from disk to disk once a week and monitor it separately. This also works if you care about having backups rather than high availability.
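Here is a sketch of the weekly disk-to-disk approach: build the rsync call, then check separately that the copy is complete. The mount points in the usage line are hypothetical, and the verification only compares file lists and sizes, a cheap sanity check that will not catch same-size corruption (rsync's `-c`/`--checksum` flag does a full content comparison at a much higher cost):

```python
import subprocess
from pathlib import Path


def rsync_command(src: str, dst: str) -> list[str]:
    # -a preserves permissions, timestamps and symlinks; --delete removes files
    # from dst that no longer exist in src, so dst stays an exact mirror.
    # The trailing slash makes rsync copy the *contents* of src into dst.
    return ["rsync", "-a", "--delete", src.rstrip("/") + "/", dst]


def run_backup(src: str, dst: str) -> None:
    subprocess.run(rsync_command(src, dst), check=True)


def snapshot(root: Path) -> dict[str, int]:
    """Map each file's path (relative to root) to its size in bytes."""
    return {
        p.relative_to(root).as_posix(): p.stat().st_size
        for p in root.rglob("*")
        if p.is_file()
    }


def verify_mirror(src: Path, dst: Path) -> list[str]:
    """Return files that are missing from dst or differ in size."""
    expected, actual = snapshot(src), snapshot(dst)
    return sorted(path for path, size in expected.items() if actual.get(path) != size)
```

For example, a weekly cron job could call `run_backup("/mnt/data", "/mnt/backup")` and then alert if `verify_mirror(Path("/mnt/data"), Path("/mnt/backup"))` returns anything. Note the trade-off of `--delete`: an accidental deletion on the main disk also propagates to the backup on the next run, so drop the flag if you would rather keep old files around.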

But for beginners, all this is still optional. Ideally, back everything up to the cloud, because even the best home RAID array can be physically knocked off a shelf onto the floor and lose everything. Wouldn't want that.

The cherry on top, and the height of tech in modern self-hosting, is Cloudflare Tunnel. I don't know why I need it yet, but I really want it.

Essentially, you keep your server only in your home network (behind NAT) but install a special daemon (cloudflared) that establishes a private encrypted tunnel to Cloudflare, and then you can access all your home services through it from anywhere on the internet.
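Which public hostname reaches which home service is decided by the `ingress` rules in cloudflared's `config.yml`: they are checked top to bottom, the first match wins, and the list must end with a catch-all rule that has no hostname (commonly `service: http_status:404`). The sketch below models only that matching order with exact hostnames; it is not cloudflared code, and the hostnames and local ports are hypothetical:

```python
# Each dict mirrors one entry of the `ingress:` list in cloudflared's config.yml
RULES = [
    {"hostname": "homeassistant.example.com", "service": "http://localhost:8123"},
    {"hostname": "gitea.example.com", "service": "http://localhost:3000"},
    {"service": "http_status:404"},  # catch-all, must come last
]


def route(hostname: str, rules: list[dict[str, str]]) -> str:
    """Return the service for the first rule matching hostname (first match wins)."""
    for rule in rules:
        # A rule without a hostname matches every request
        if "hostname" not in rule or rule["hostname"] == hostname:
            return rule["service"]
    raise ValueError("ingress rules must end with a catch-all entry")


print(route("gitea.example.com", RULES))
print(route("unknown.example.com", RULES))
```

Real cloudflared rules can also match wildcards and paths, which this sketch leaves out.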

Now people with public IP addresses are like "uhh, what's the difference???", but I live in a country where having a public IP at home is like having a Porsche (and there are more of the latter), so people here are really excited about Cloudflare Tunnel.

Instead of a conclusion, I'll drop some links to make the process of getting into this whole self-hosting and automation story easier.