Vastrik

Last week I was in France again. When you're in France, your main goal is EATING. Because in France, EATING is the best in the world.

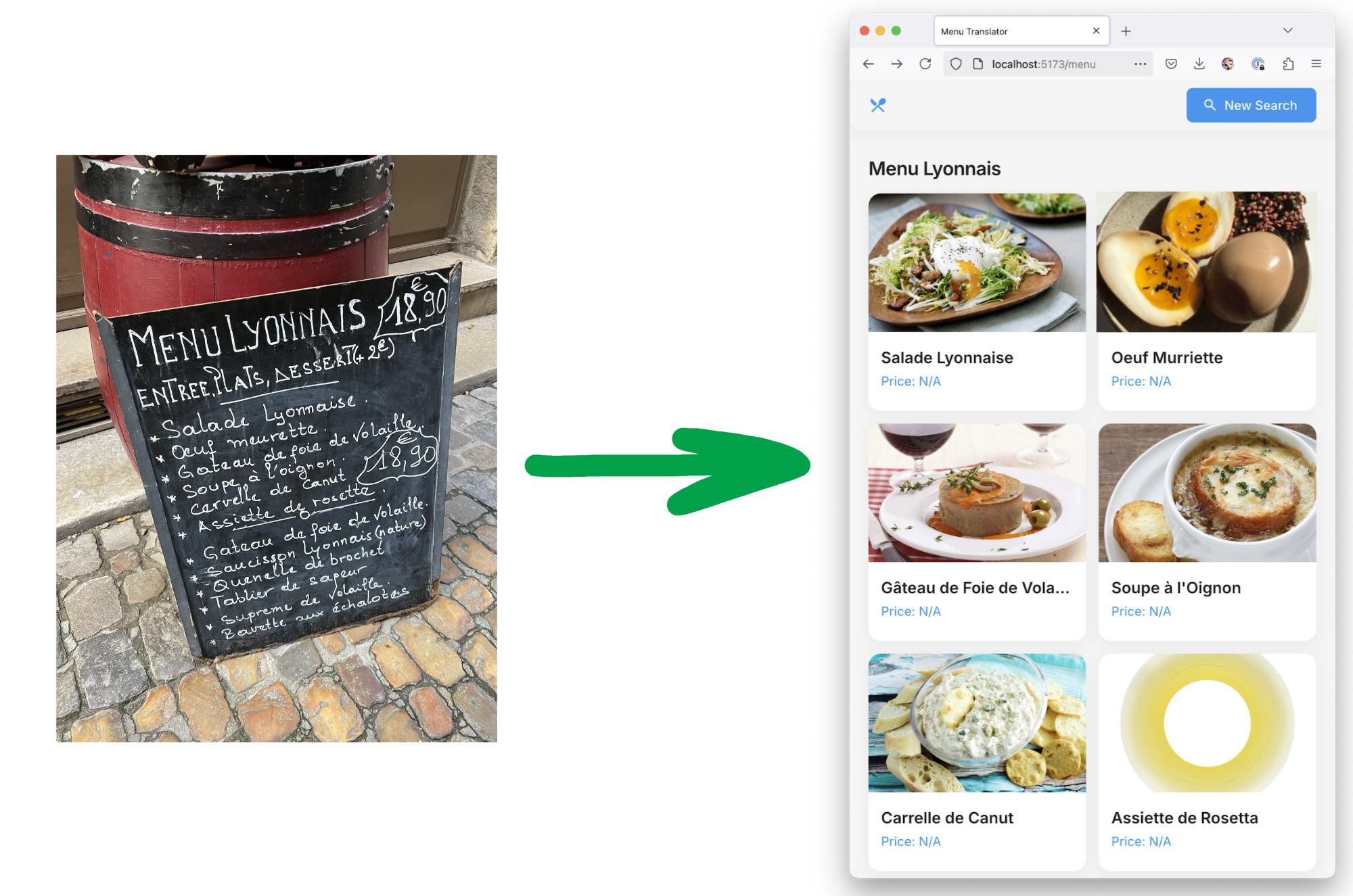

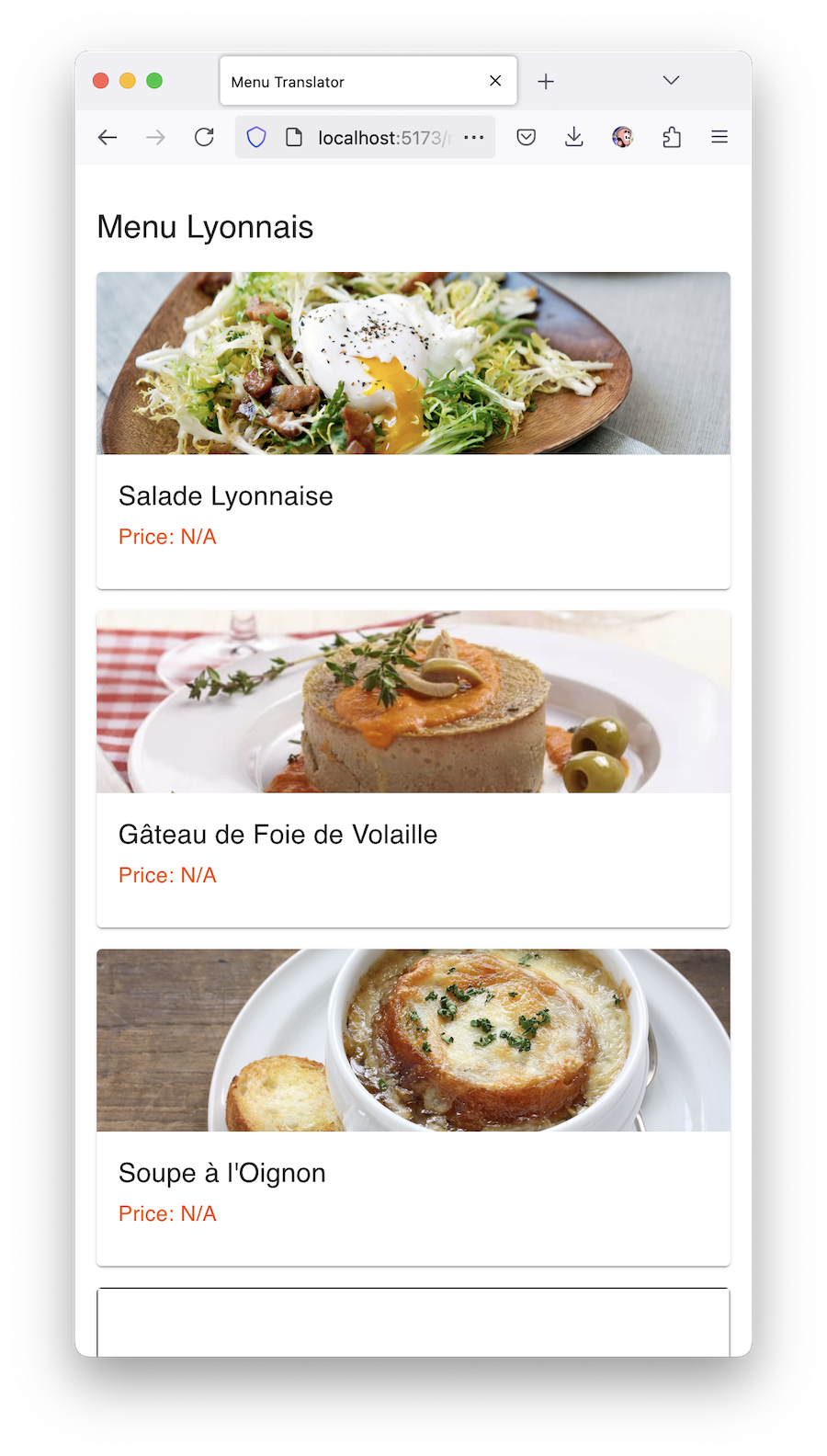

But there's one problem: if you're not going to some tourist trap but to an authentic restaurant in any French city, at best (when the owner has good handwriting and woke up in a good mood) their menu will look something like this:

For those who haven't been to France, let me clarify: even when you ask for a "menu", they don't give you a pretty book with pictures like you're used to. They literally put this black board on your table with daily dishes written in white chalk (in French). S'il vous plaît, monsieur! Du vin pour les dames?

Yeah, we're usually lucky to have French-speaking friends with us. But sometimes there are situations when you want EATING, but no friends are around.

That's how I came up with a startup idea a while ago.

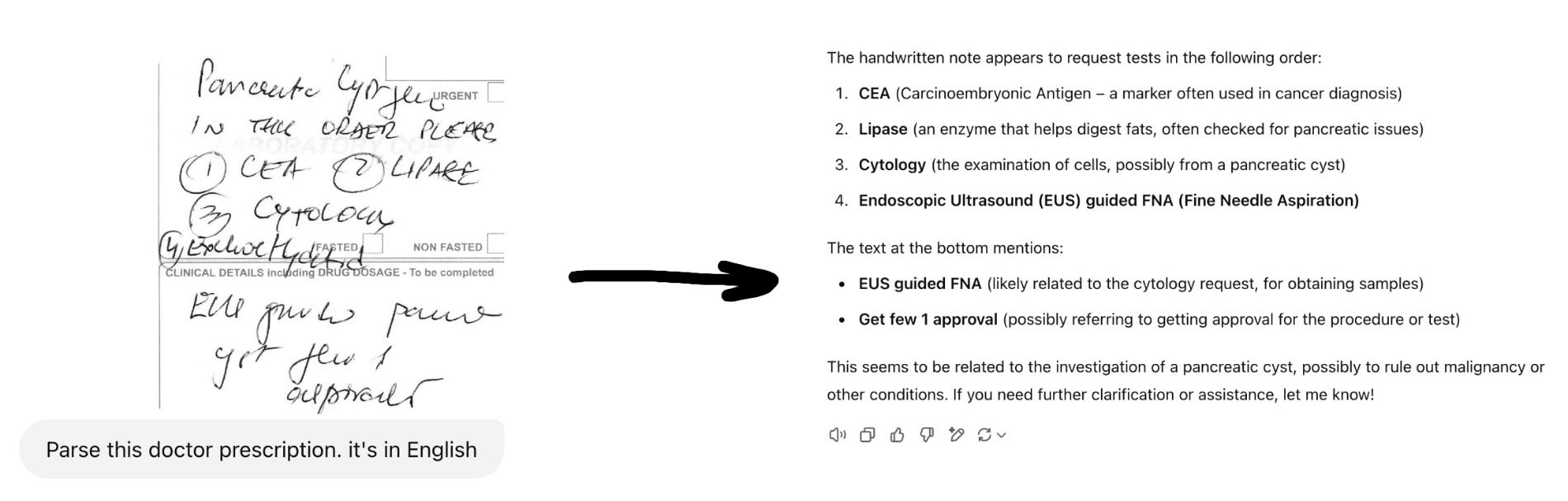

ChatGPT (and its alternatives) has an interesting feature — they're surprisingly good at recognizing handwritten text. Even if it's some doctor's scribbles or a police report in Serbian (don't ask how I know this). They're way ahead of any old OCR apps.

So during my last trip to France, I had this absolutely stupid yet genius idea:

I need to make an app where I take a photo of a restaurant menu, and it returns me a list of dishes with descriptions and photos of what they actually look like. And ratings... And...

Am I sure that there are at least a couple hundred thousand such apps in the App Store already? Of course! Do I care? Nope))00)

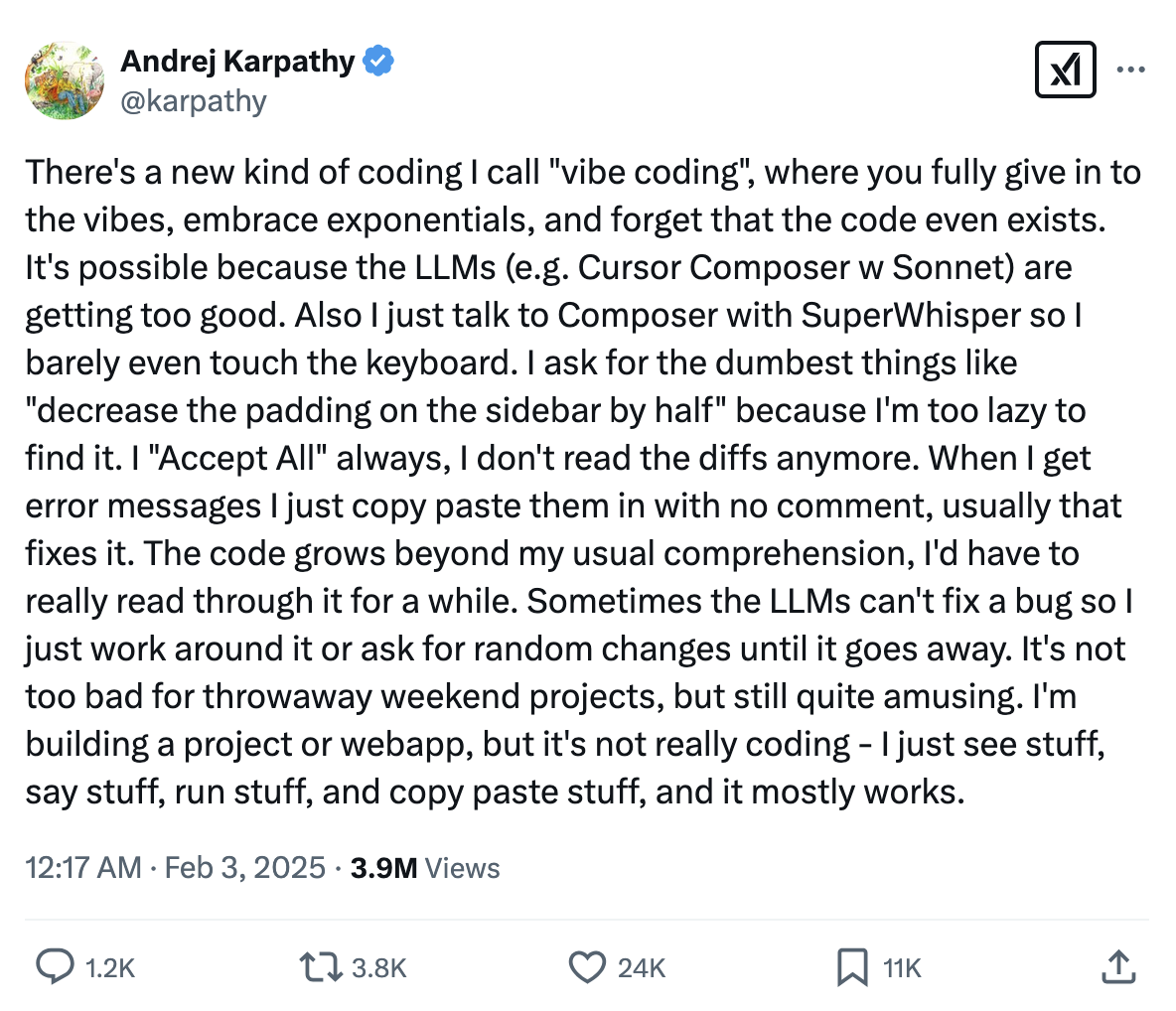

And this is where we get to the actual topic of this post — vibe coding!

I don't know who came up with the term vibe coding, but it became popular after Andrej Karpathy's tweet a couple of weeks ago:

The idea of vibe coding is that with the emergence of LLM tools that can generate code and even entire projects, like Claude Sonnet or Cursor, hobby programmers who like to code something in the evening have discovered a new way of programming: by chatting with LLMs, or even by voice.

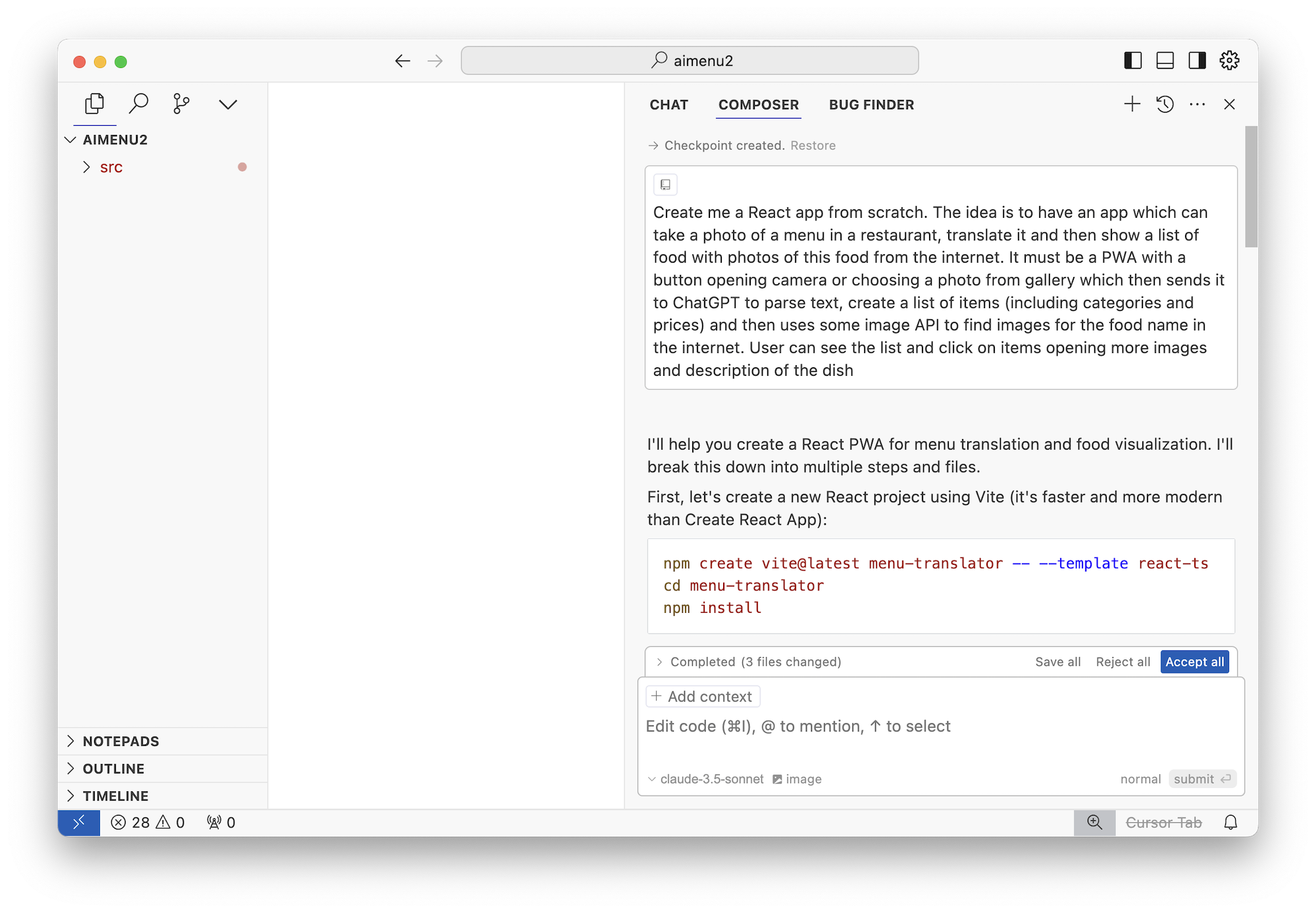

You open Cursor or any other AI IDE, create a clean project, and start writing messages in the chat about what you want to get.

For example, I started with this prompt:

Create me a React app from scratch. The idea is to have an app which can take a photo of a menu in a restaurant, translate it and then show a list of food with photos of this food from the internet. It must be a PWA with a button opening camera or choosing a photo from gallery which then sends it to ChatGPT to parse text, create a list of items (including categories and prices) and then uses some image API to find images for the food name in the internet. User can see the list and click on items opening more images and description of the dish

Then I send my prompt to Le Chat Sonnet...

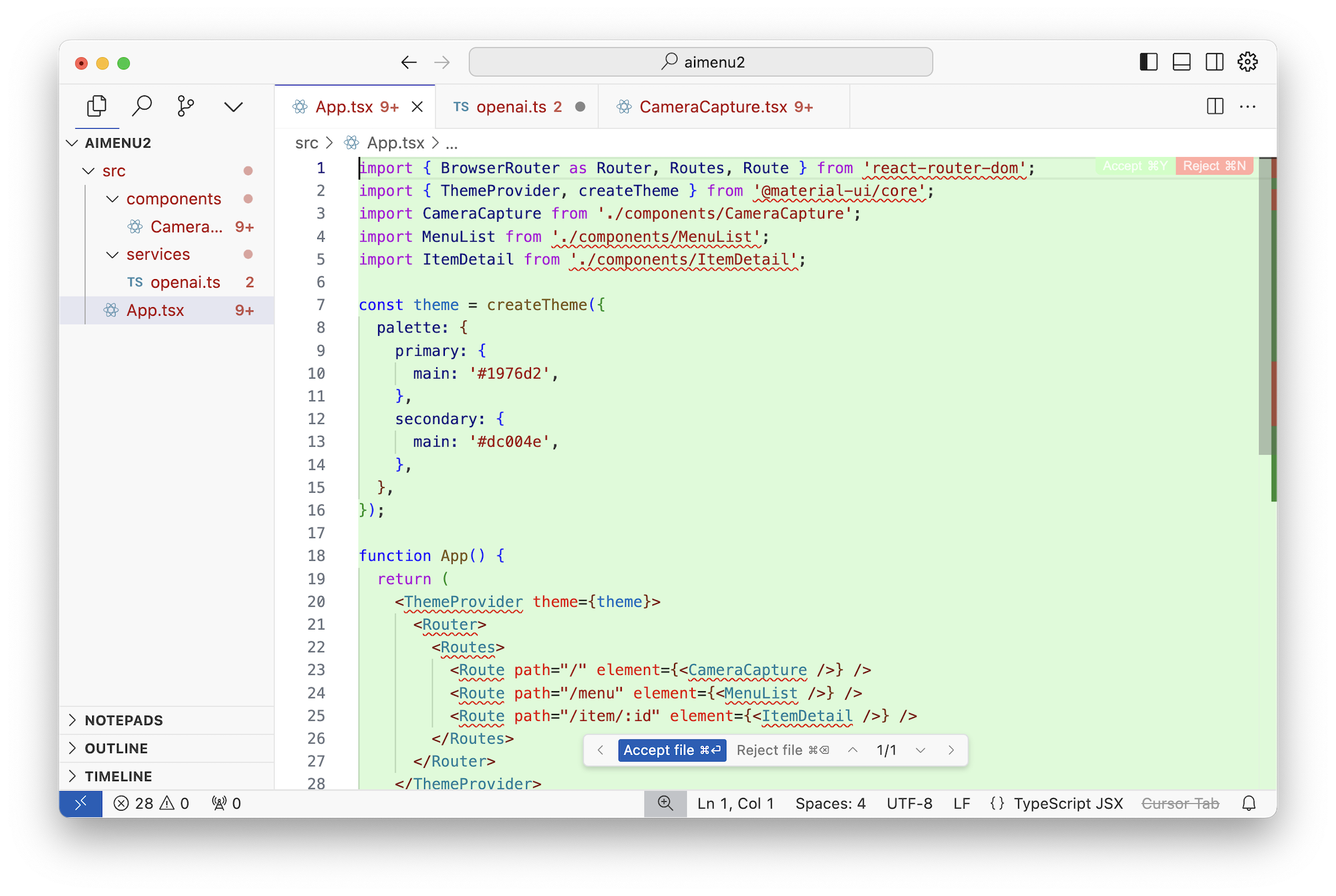

And the GenAI magic magically generates me...

total crap.

The project structure is awful, everything is crammed into one component, it calls the OpenAI API through raw REST requests instead of the official client library, there's no screen separation, no progress indicators, and hundreds of requests run back and forth without caching. Basically, it looks like a junior coder wrote it. No sane person could use this.

Though I like that it chose React + Vite + MUI. Even though I'm a backend guy, the frontend bros in the hood told me this is a gentleman's choice for prototypes.

And it actually RUNS!

If you do npm create/install as it asks, then npm run dev will actually launch an (almost) working project, where you'll just need to manually change a couple of API keys in the config and update to modern OpenAI model versions.

Overall, it works.

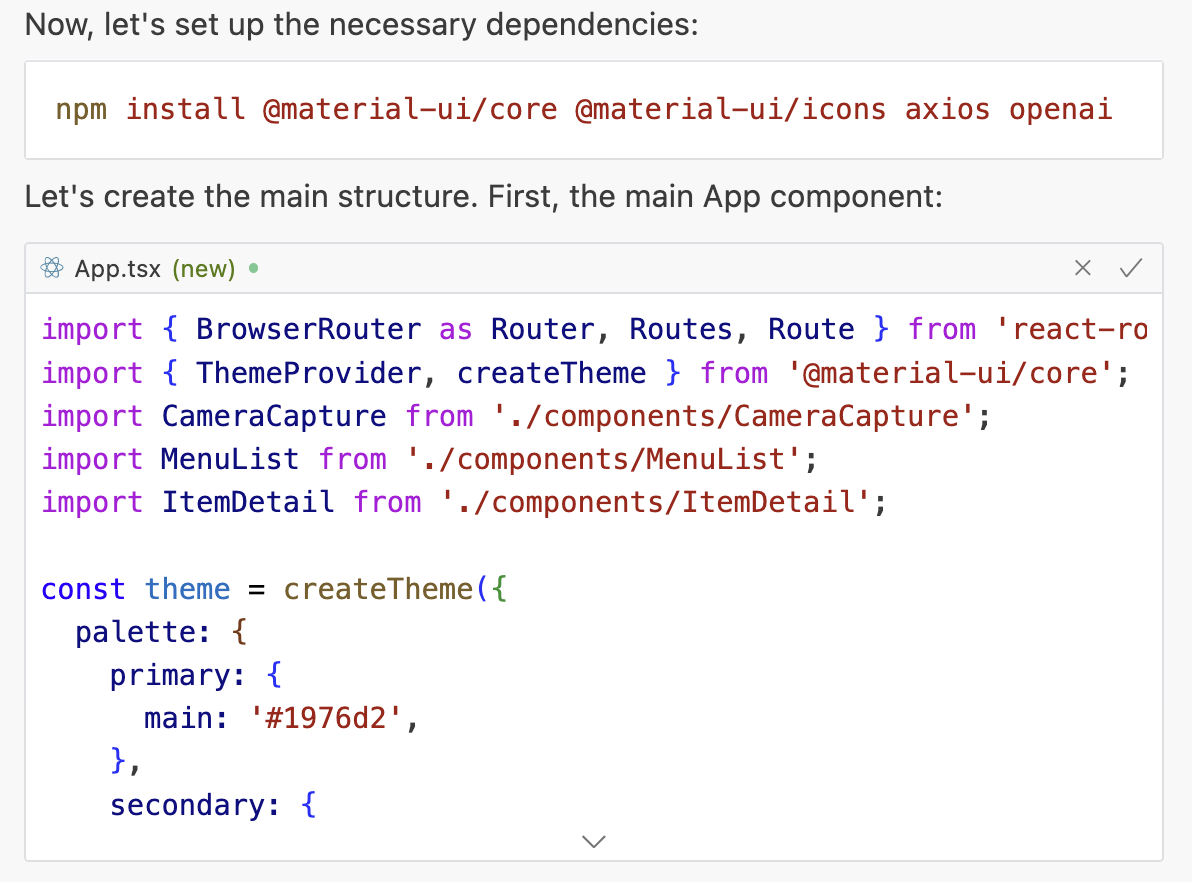

But now you understand that you need to talk to LLMs not like a senior to a senior, but like a senior to a junior. You start formulating your thoughts more specifically.

Every screen, every button, every layout, button sizes and icons on each screen, menu position, back buttons, and even framework choice — everything must be mentioned and described by you.

It's like writing a JIRA ticket for a VERY dumb developer. Except the result appears immediately, not after a couple months of "quick-calls" and CSS framework selection meetings.

You can't demand complex things from an LLM right away. Otherwise, it will get tired and break halfway through (just like a real programmer). You need to come up with and describe that very MVP, so that the result of the first iteration is simultaneously as simple and basic as possible, but also launches, works, and solves its main task.

In short, now you're like a product manager for an LLM. Welcome! I hope real product managers haven't realized yet that they don't need programmers anymore...

I delete the project, create a new one, and write the following prompt to Claude 3.5 Sonnet:

Let the vibe coding begin!

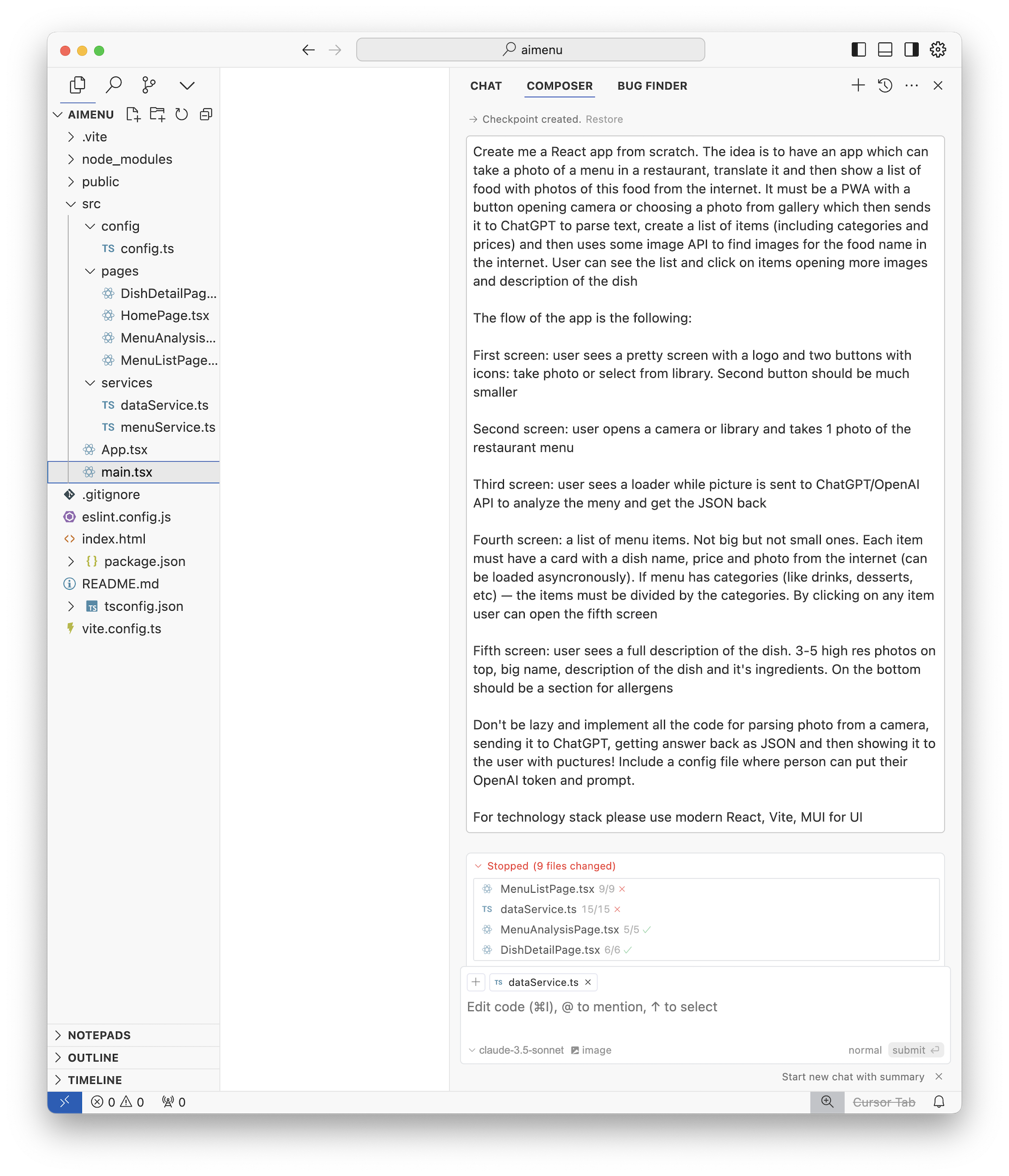

Okay, this time it generated what looks like a decent app structure at first glance. Four screens, loading indicators, URL routing, it even created a separate model-service that handles menu business logic. Looks like it has passed that "Become a Web Developer in 13 Days" course. Plus vibe!

📝 Note: when I was writing this post and running my prompts again to make screenshots — they gave me different results each time, sometimes even resulting in a non-working project. So if you can't replicate it — just modify the request and keep fighting until victory!

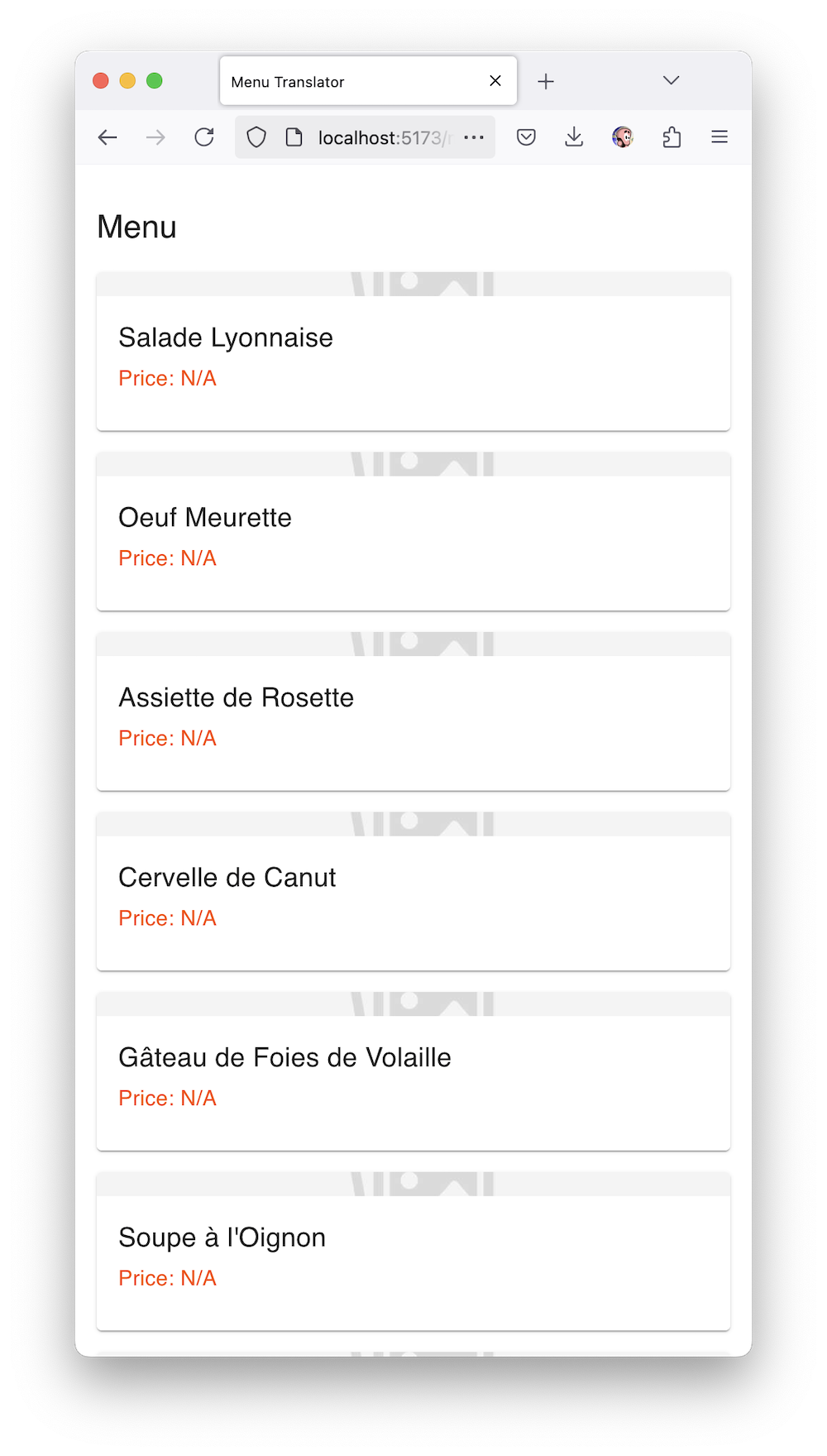

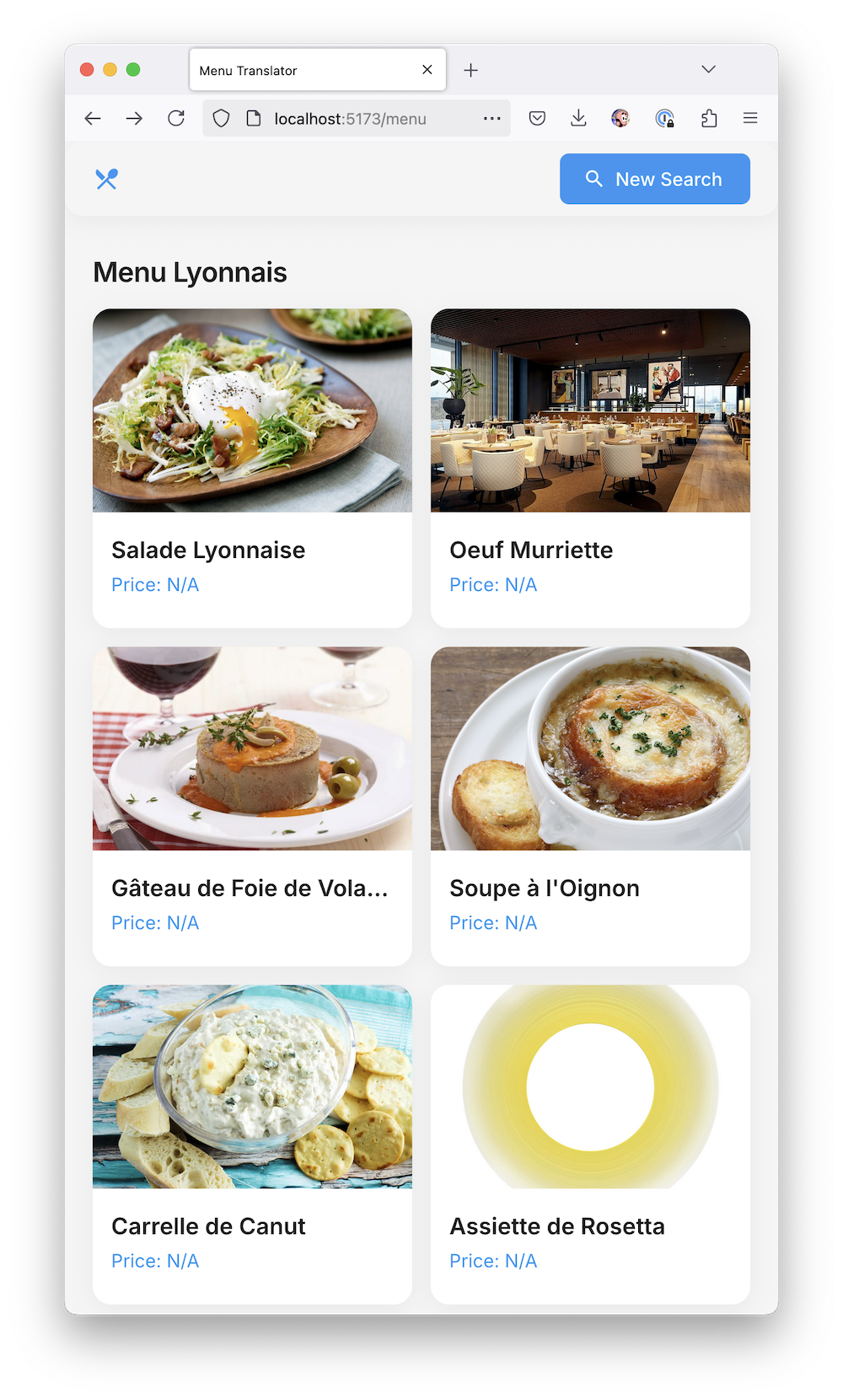

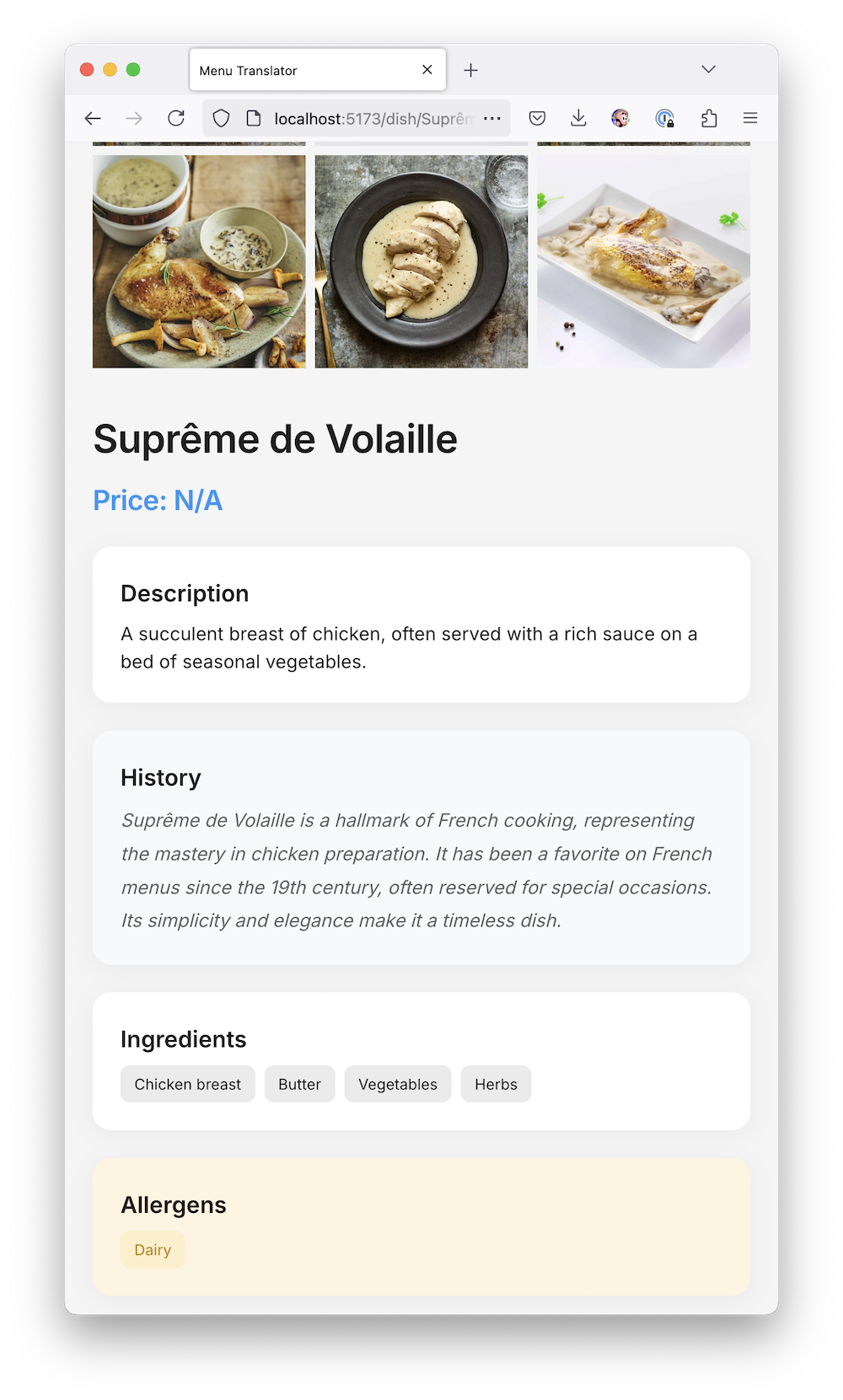

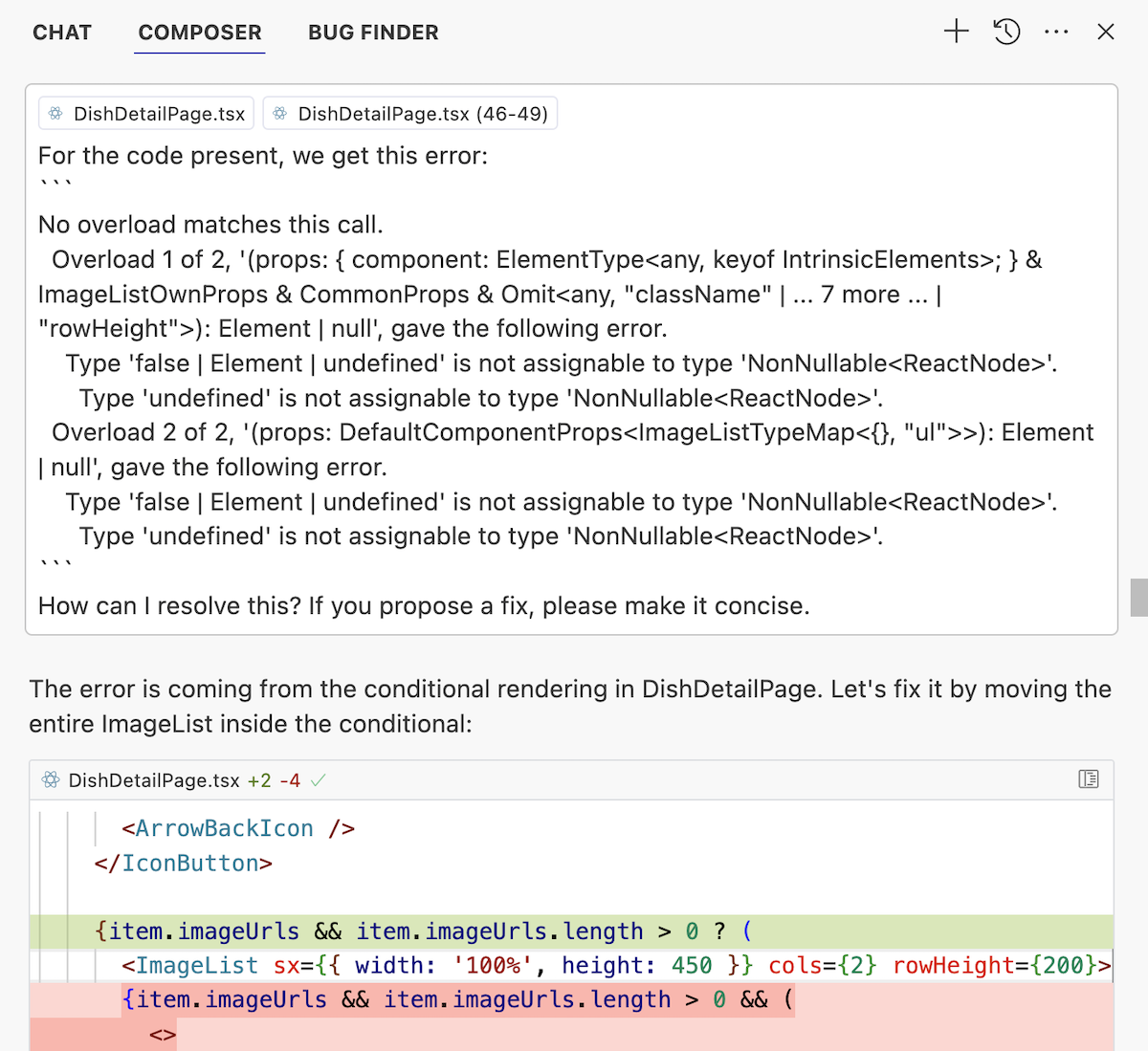

On the first screen, you can select a menu photo from the gallery or open the camera, on the second one analysis happens with a beautiful Material UI loader, and on the third one it shows the menu itself with food images.

I also generated the app icon using DALL-E, asking to add some French vibe to it. It turned out to be cheap crap, but fits the vibe. The mustache came out nice though.

The first thing that catches the eye: the prompt that the generated code sends to ChatGPT is very poorly written. The result doesn't even parse because ChatGPT returns a text response in Markdown instead of JSON. But this was expected: LLMs are still bad at writing prompts for other LLMs.

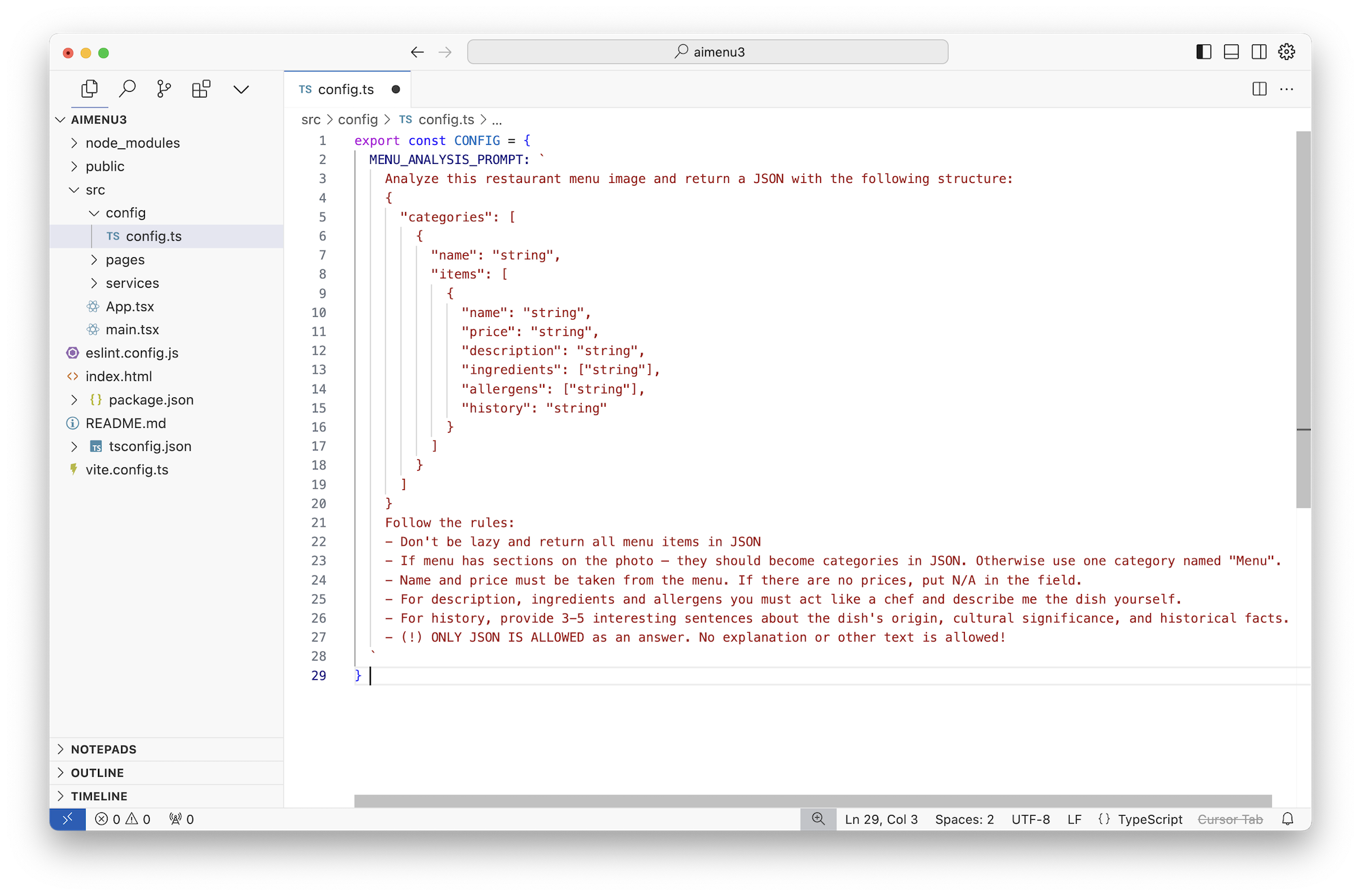

So we'll fix the prompt manually, using all our modest knowledge about Prompt Engineering from other articles. Something like this:

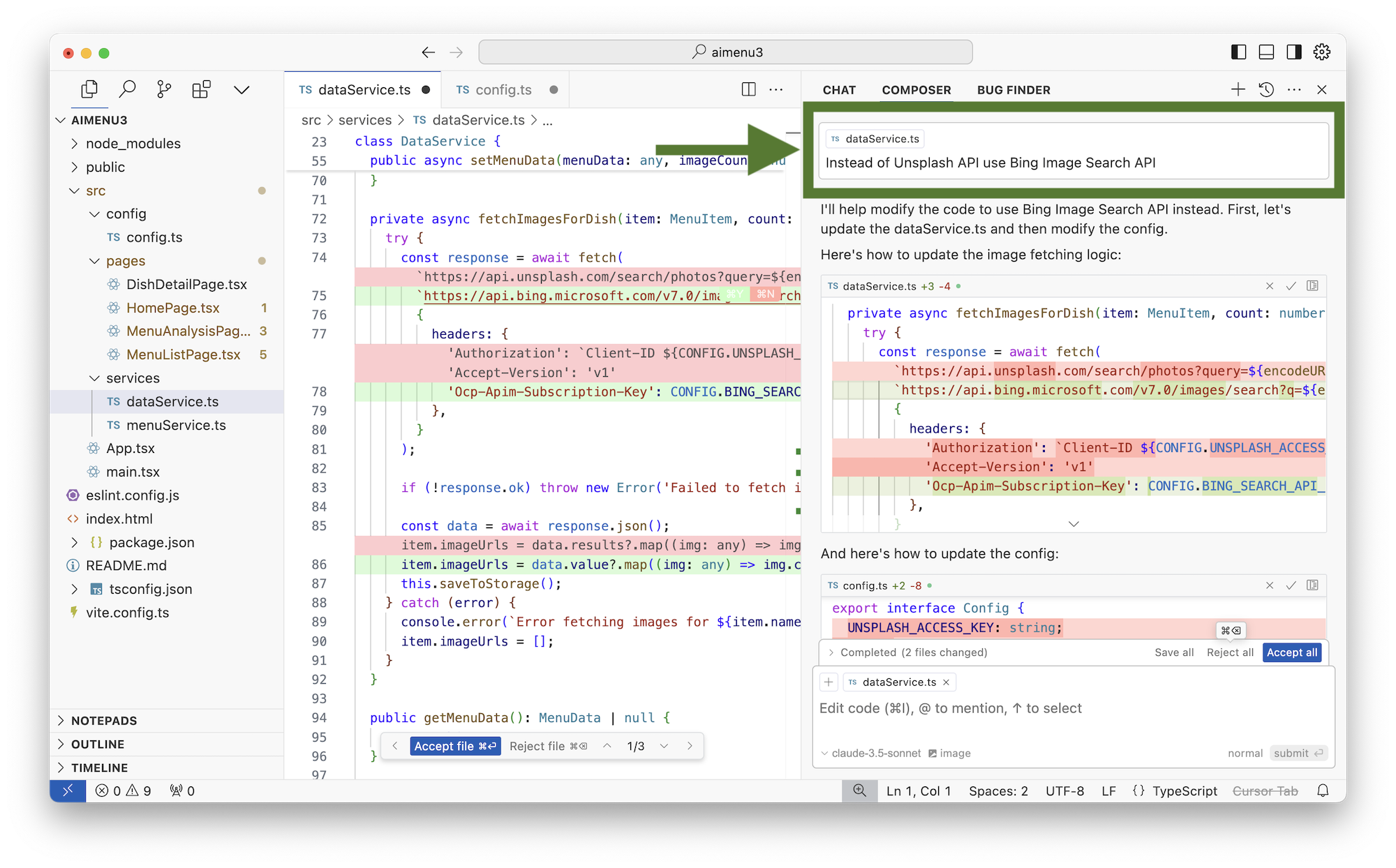
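Fixing the prompt is one half of the cure; the other half is not trusting the reply. Here is a minimal sketch of a tolerant parser, assuming the model was asked for a JSON array of dishes. The `MenuItem` fields and both function names are made up for illustration and are not what Cursor generated.

```typescript
// Hypothetical shape of one parsed dish; the field names are assumptions.
interface MenuItem {
  name: string;
  category: string;
  price: string | null;
}

// Three backticks, built at runtime so this snippet can live inside Markdown.
const FENCE = "`".repeat(3);
const FENCED_BLOCK = new RegExp(FENCE + "(?:json)?\\s*([\\s\\S]*?)" + FENCE, "i");

// Pull the JSON payload out of a reply that may be wrapped in a Markdown
// code fence or surrounded by chatty text.
function extractJson(reply: string): unknown {
  const fenced = reply.match(FENCED_BLOCK);
  const candidate = fenced ? fenced[1] : reply;
  const start = candidate.search(/[\[{]/);
  const end = Math.max(candidate.lastIndexOf("}"), candidate.lastIndexOf("]"));
  if (start === -1 || end < start) {
    throw new Error("No JSON found in model reply");
  }
  return JSON.parse(candidate.slice(start, end + 1));
}

// Validate the payload loosely and fill in defaults for missing fields.
function parseMenu(reply: string): MenuItem[] {
  const data = extractJson(reply);
  if (!Array.isArray(data)) {
    throw new Error("Expected a JSON array of menu items");
  }
  return data.map((raw) => ({
    name: String(raw.name ?? ""),
    category: String(raw.category ?? "Other"),
    price: raw.price == null ? null : String(raw.price),
  }));
}
```

With something like this in place, a reply that starts with "Sure! Here is your menu:" followed by a fenced block still parses, and a reply with no JSON at all fails loudly instead of rendering an empty screen.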

The second thing I didn't like — it decided to use Unsplash for image search. Unsplash is good, but it's not great for our use case because it's mostly stock images, and we want real ones like in Google search. But I heard Google charges a lot for their API, maybe we should try Bing?

That's exactly what we write to it:

The code worked, but we need to get a Bing Search API key somewhere. For this, I had to register with Microsoft Azure, go through 8 circles of verification, enter all credit card data, upload a photo of my dog, dirty underwear, and mortgage my house.

Even despite all this, I got nothing. Azure just crashed with random errors in the console and didn't even let me create a test API key for image search. Unfortunately, LLMs can't help us with this issue yet — that's minus vibe.

Let's just forget about it. Microsoft is doing crappy Microsoft stuff as usual. Azure's interface is disgusting.

We ditch Bing and ask Cursor to rewrite everything for the Google API. At least it didn't crash with errors and gave me a test key for 10K requests. The images started working.

To check the vibe, let's address a simpler problem.

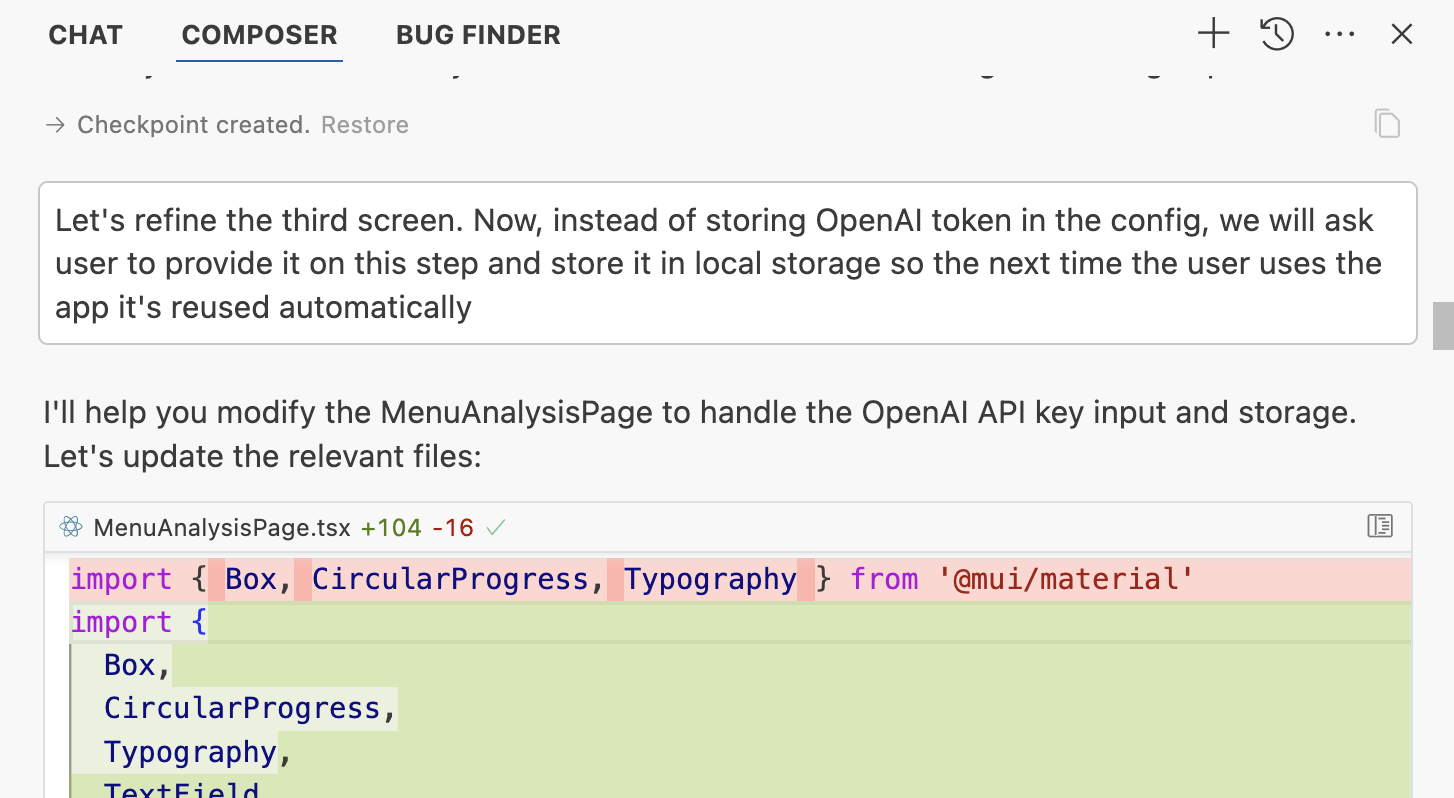

Yes, I want a completely local-first and serverless application. However, to recognize photos through ChatGPT, we need to get an OpenAI key somewhere, and we can't just store it openly in the code.

So I thought that for a free prototype, asking users to enter their ChatGPT key is generally okay. Let them pay for ChatGPT as they use it. Later we'll make a paid version with our own key.

I ask Cursor to modify the menu analysis screen so that it asks for the OpenAI key and saves it in local storage for future requests.

Apply, save, apply — yes, it works! Plus vibe!
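Roughly, the logic behind that screen could look like the sketch below. It is an assumption about the shape of the solution, not the code Cursor wrote: `KeyValueStore`, `API_KEY_SLOT` and `getOrAskApiKey` are hypothetical names, and the storage is passed in so the function also works outside a browser.

```typescript
// Minimal subset of the browser's localStorage API.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

const API_KEY_SLOT = "openai_api_key"; // hypothetical storage key

// Return the saved key, or ask the user for one and remember it.
function getOrAskApiKey(
  store: KeyValueStore,
  ask: () => string | null,
): string | null {
  const saved = store.getItem(API_KEY_SLOT);
  if (saved) return saved;

  const entered = (ask() ?? "").trim();
  if (!entered) return null; // user cancelled or typed nothing

  store.setItem(API_KEY_SLOT, entered);
  return entered;
}
```

In the browser this would be called as `getOrAskApiKey(window.localStorage, () => window.prompt("OpenAI API key"))`. Keep in mind that anything in local storage is readable by every script running on the page, which is tolerable for a prototype where users bring their own key.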

But something's still missing...

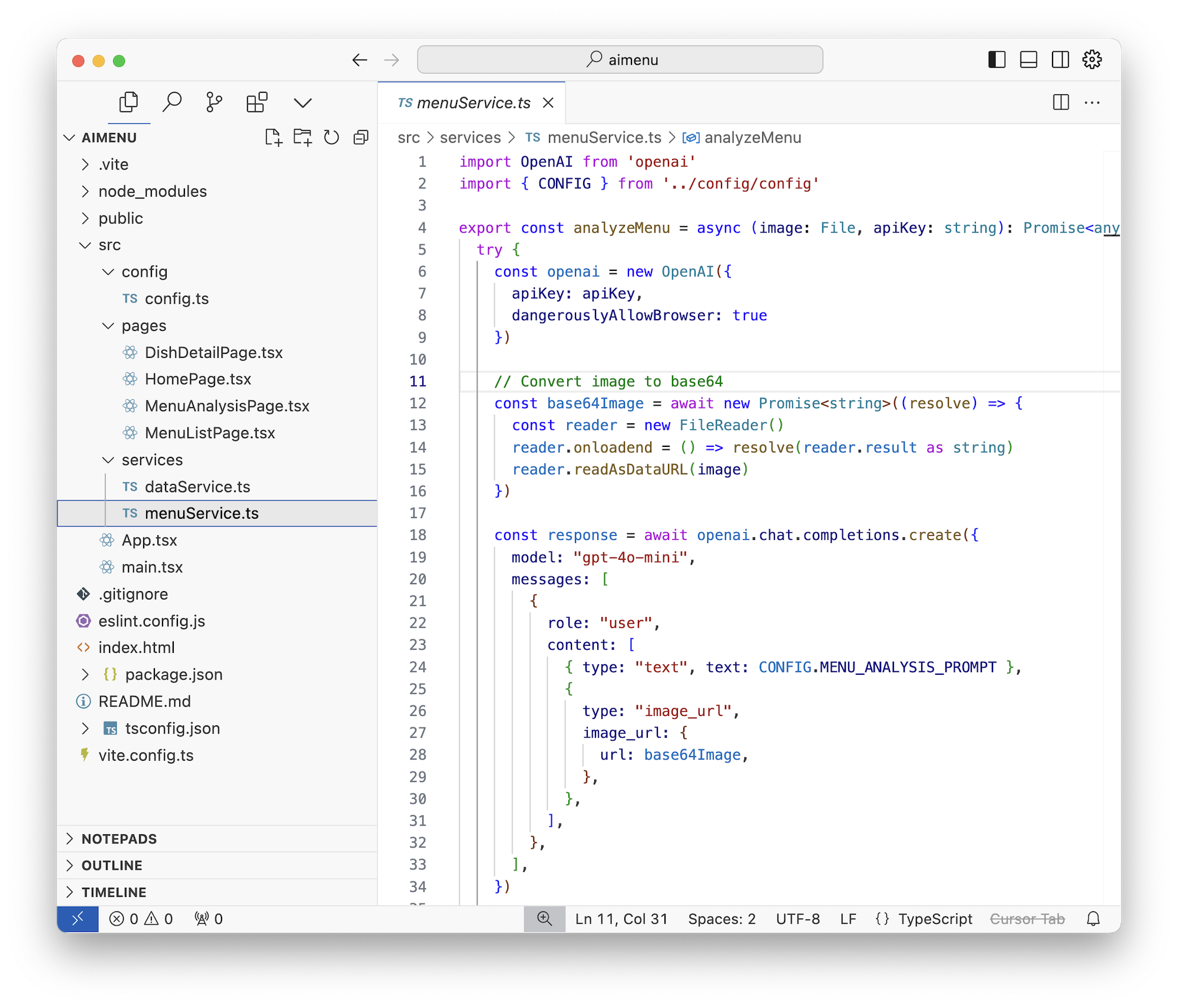

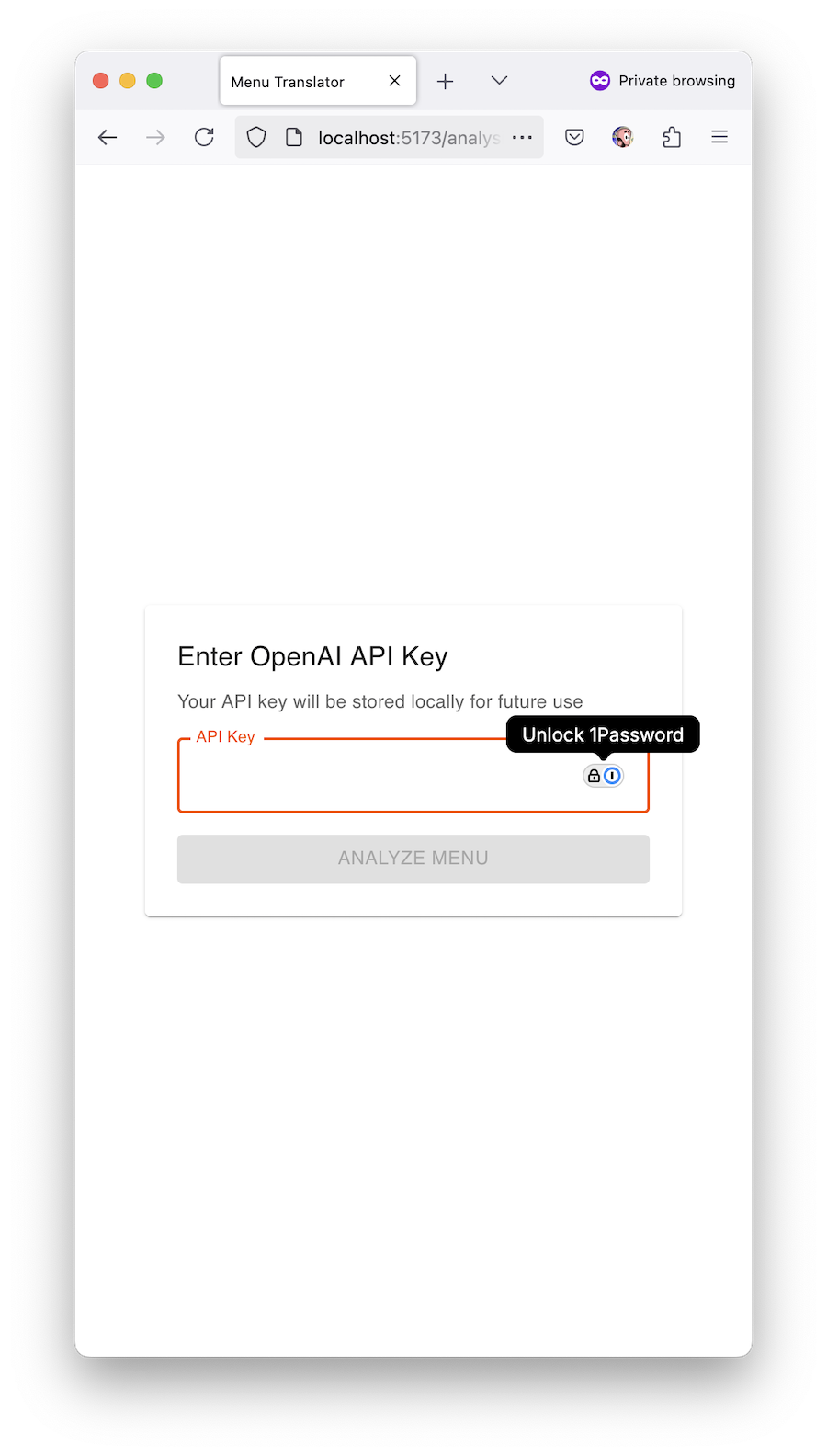

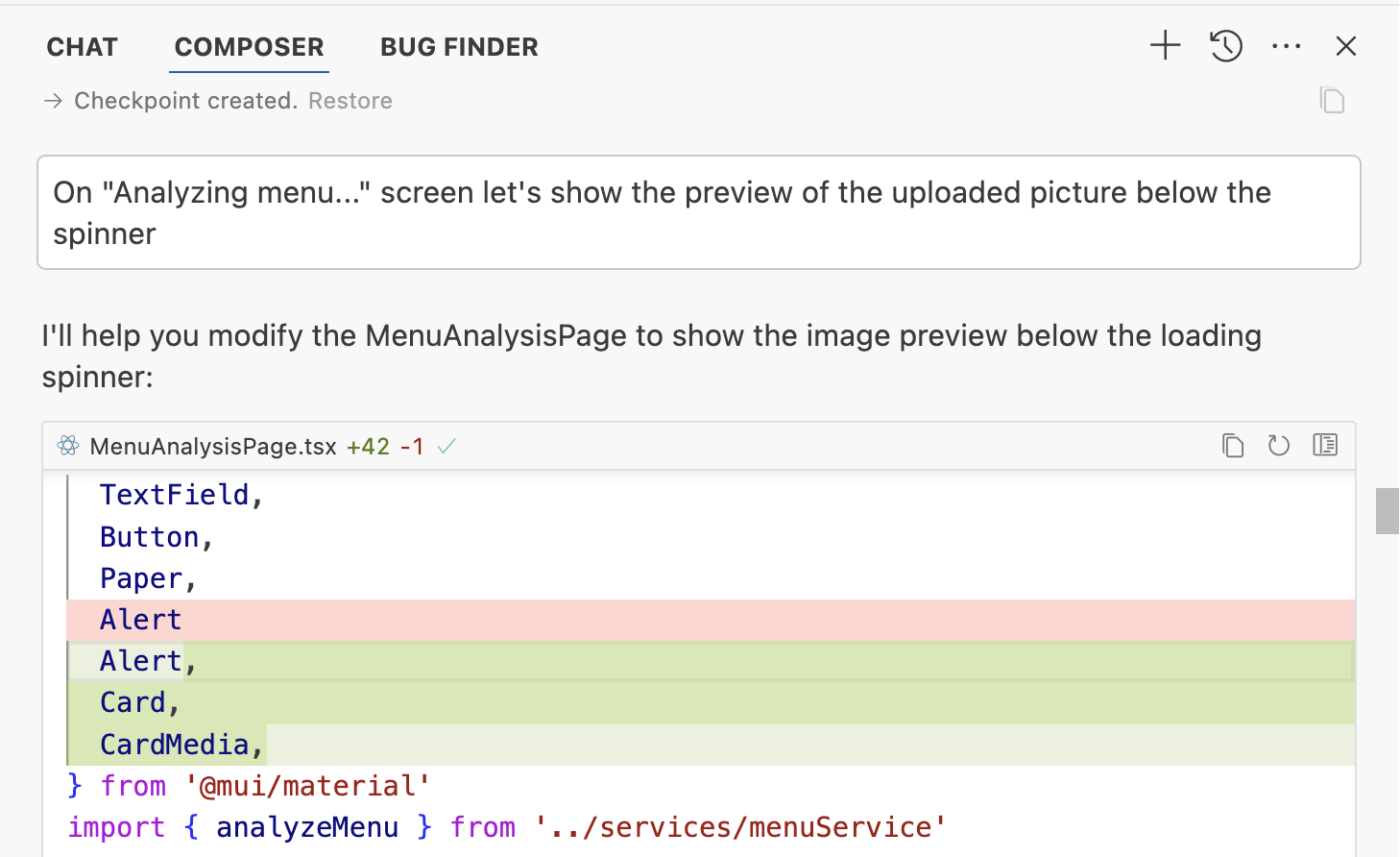

After uploading an image, we're shown the "Analyzing image..." screen for a long time, during which a lot of magic happens like converting the image to base64, uploading it through OpenAI API, and getting a lengthy JSON in response.
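For the curious, the "magic" is less magical than it sounds. Below is a hedged sketch of the base64 step and of the request body in the Chat Completions format, where the photo travels inline as a data URL. The function names, the prompt parameter and the `gpt-4o` model choice are assumptions for illustration; the generated app may well do it differently.

```typescript
// Encode raw bytes as base64 with btoa, which browsers (and recent Node
// versions) expose as a global.
function bytesToBase64(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary);
}

// Build a Chat Completions request body with the menu photo inlined.
function buildMenuRequest(imageBytes: Uint8Array, mimeType: string, prompt: string) {
  const dataUrl = "data:" + mimeType + ";base64," + bytesToBase64(imageBytes);
  return {
    model: "gpt-4o", // assumption: any vision-capable model would do
    messages: [
      {
        role: "user",
        content: [
          { type: "text", text: prompt },
          { type: "image_url", image_url: { url: dataUrl } },
        ],
      },
    ],
  };
}
```

The resulting object is what gets sent to the chat completions endpoint, either as a JSON body or through the official client. Since base64 inflates the payload by about a third, a full-resolution phone photo makes the wait noticeably longer.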

The process takes about 20-30 seconds — which is extremely sad for the user. They might get bored, fall into depression, go watch PornHub or join the Green Party — and that's definitely minus vibe.

The simplest UX solution during this time would be to show them the uploaded image so they can stare at it and not get bored!

Let's ask:

Now the loading screen looks more interesting. You can look at the fresh photo and try to read the names yourself while the LLMs are huffing and puffing...

Off-topic: I just realized that France is the only EU country that has its own GenAI. I'm sure it's because they also use it to read their menus!

Okay, everything kind of works, but something feels off about our new "startup"!

Probably because it looks like eye-burning crap, like it was made by IT guys.

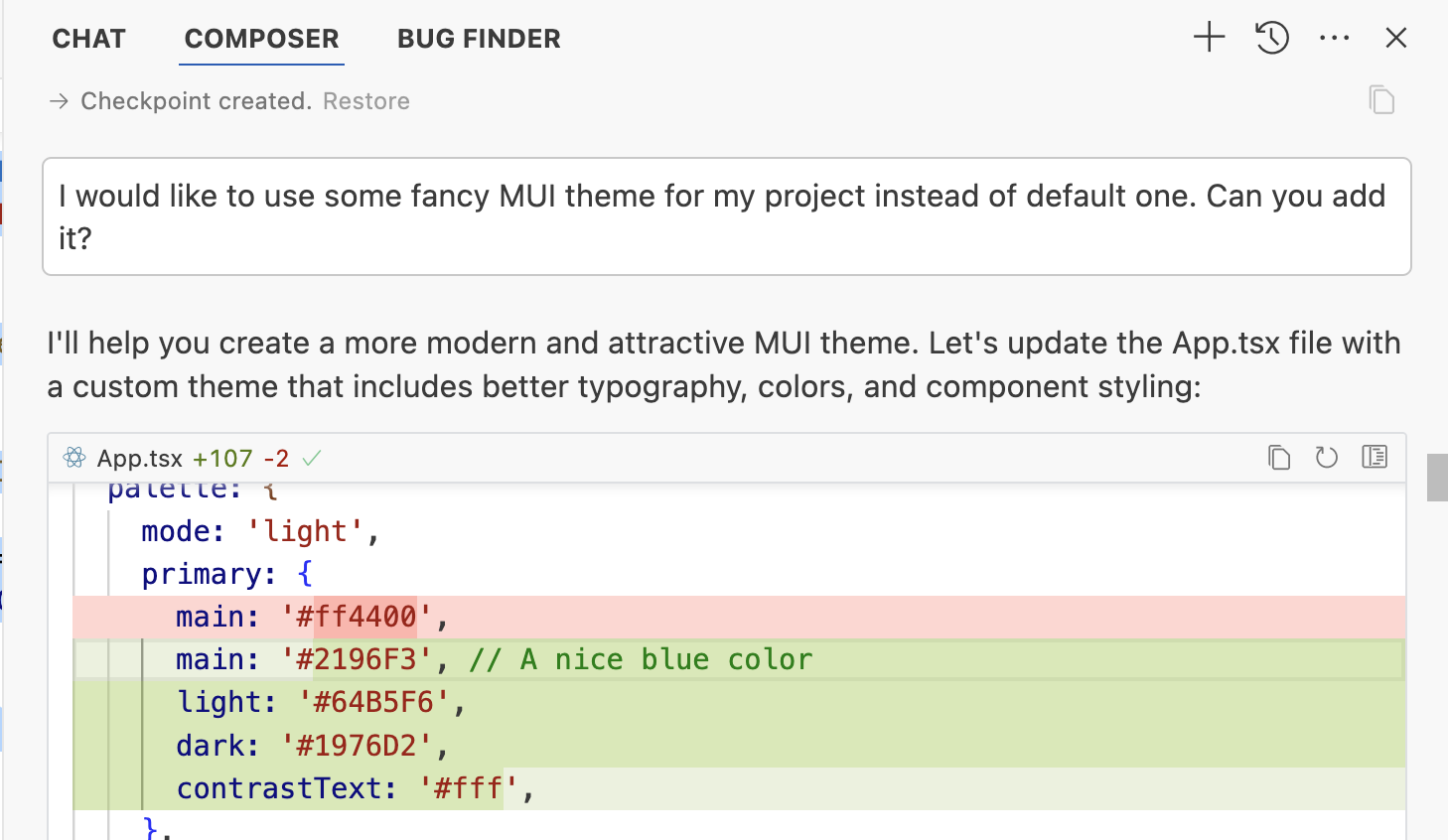

From my experience, I know that MUI by default always looks, let's say, austere. It's just waiting for you to give it ~~a punch in the face~~ some fun theme!

But I have no idea what themes are in fashion now, and googling "material ui themes" shows a bunch of paid templates for $66, and paying money to capitalists is minus vibe.

So we'll apply LLM magic again and just ask it: "yo our app looks like crap, give it a beautiful modern trendy theme for MUI." Don't laugh, it works.

Ma-a-a-aximum plus vibe!

Works like shit, but looks beautiful!

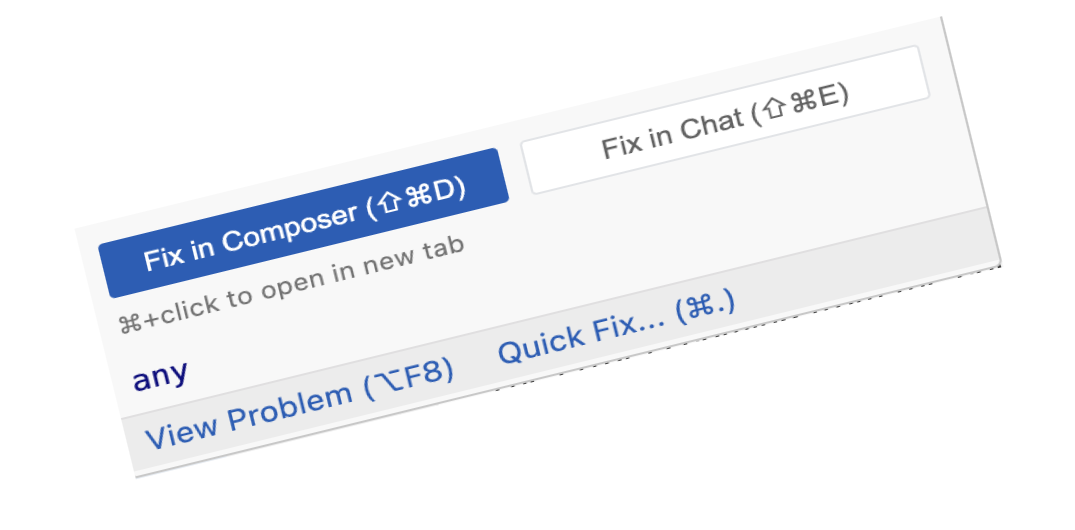

But, damn, wait. Some error popped up. But we're already so hyped on the vibe that we've downed three bottles of beer, so it's not right to figure out what happened ourselves, let the "Fix with AI" button work for us.

Now that's more like it!

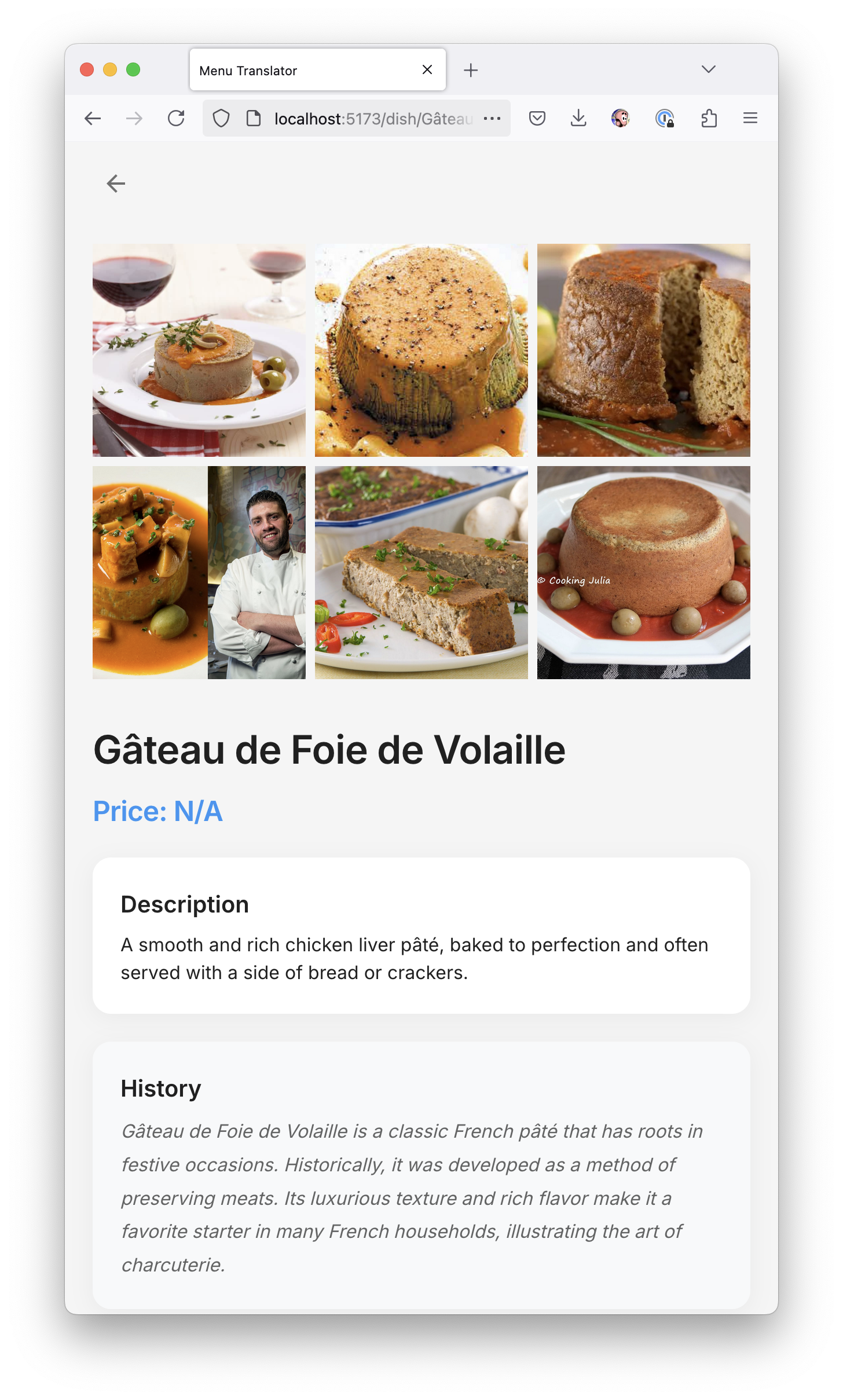

Our "startup" is almost ready to be pushed to GitHub and collect likes on Twitter. It really works as I wanted — takes a photo, takes a ChatGPT key, parses the menu, divides it into categories, shows a list of dishes with their photos, even tells about ingredients, dish history, and allergens.

We could sell a paid subscription to vegans or allergy sufferers right away. Or go crazy with B2B and generate beautiful AI menus with photos for restaurants every day.

But there's still one little nuance that prevents me from putting this all on GitHub Pages and making you all happy: it still uses Google Image Search, which costs $5 per 1000 requests.

I even conducted separate research with my imaginary professional friends from ChatGPT Pro. Turns out, on the modern internet there really isn't an open and free API for image search.

Complete clusterfuck.

No, just think about it. We learned to launch cars into space, travel 500 km/h on trains, invented artificial intelligence, but we STILL don't have a way to freely find pictures of bacon omelet by searching for "bacon omelet". Google, Bing, and others want $5 per 1000 requests for such luxury.

1000 requests is a joke. If your menu has at least 100-200 dishes and every dish needs its own image search, then you have to pay Google up to ONE dollar for each menu photo you scan. Fuck. We won't make a startup this way. Minus vibe.

I burned through 2500 of my 10K free requests just in a couple hours of development. And asking users to bring a Google API key too is dead on arrival: I spent several hours just getting one myself.
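The napkin math, spelled out as a tiny sketch. It assumes one image search request per dish, which is my simplification; the $5 per 1000 requests and the 10K test quota are the figures from this post.

```typescript
const PRICE_PER_1000_REQUESTS_USD = 5; // price quoted above

// Cost of one scanned menu, assuming one image search per dish.
function imageSearchCostUsd(dishCount: number): number {
  return (dishCount * PRICE_PER_1000_REQUESTS_USD) / 1000;
}

// How many menus of a given size fit into a request quota.
function menusPerQuota(quota: number, dishCount: number): number {
  return Math.floor(quota / dishCount);
}

console.log(imageSearchCostUsd(100)); // 0.5
console.log(imageSearchCostUsd(200)); // 1
console.log(menusPerQuota(10000, 200)); // 50
```

So the whole 10K test quota is worth about fifty big menus, before counting any retries during development.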

I even tried to find other solutions. I tried to forget about photos and generate menu dish images through DALL-E, but it has very draconian limits, one image takes twenty seconds to generate, and the whole menu would take a couple of hours and many dozens of dollars.

I tried using Web Scraping instead of API, but neither Sonnet nor ChatGPT helped me here — not a single search engine (not even crappy Pinterest) lets you properly scrape images by query.

And visuals are the most important thing in a startup. You can recognize French restaurateurs' scribbles as brilliantly as you want, but if you can't show pictures of their dishes — you've lost.

So I lost too. Bye!

I'll publish the code, but there won't be a demo: https://github.com/vas3k/stuff/tree/master/javascript/aimenu

Then I started playing with my new startup and asking Cursor and Sonnet to improve it more and more. I added a dataService that cached data in the model, saved it in Local Storage, and even allowed viewing scan history from it.
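One piece of such a service could be an in-memory cache in front of the image search, so the same dish name never hits the paid API twice. This is a sketch under that assumption, not the code from the repo; `ImageSearch` and `withCache` are hypothetical names.

```typescript
// Any function that turns a dish name into a list of image URLs.
type ImageSearch = (dishName: string) => Promise<string[]>;

// Wrap a (paid) image search so each dish name is looked up at most once.
// The promise itself is cached, so parallel lookups share a single request.
function withCache(search: ImageSearch): ImageSearch {
  const cache = new Map<string, Promise<string[]>>();
  return (dishName) => {
    const key = dishName.trim().toLowerCase();
    let pending = cache.get(key);
    if (!pending) {
      pending = search(dishName.trim()).catch((err) => {
        cache.delete(key); // do not cache failures, allow a retry later
        throw err;
      });
      cache.set(key, pending);
    }
    return pending;
  };
}
```

Caching the promise rather than the result matters here because a menu screen tends to fire all its lookups at once, and two "Crème brûlée" entries would otherwise both reach the API before either answer comes back.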

I expanded the prompt to make ChatGPT return me the dish's history, what region it's from, and how to eat it.

Tried to play with ranking, like asking ChatGPT to give each menu item a rating on several parameters so I could choose the most interesting dish in this restaurant. But it turned out to be crap and I gave up on that idea.

The vibe was kind of gone by this point.

Too bad we couldn't make a startup, but I still had a really great evening!

Drawing conclusions, I have three takeaways:

1. The longer you chat with an LLM, the worse it writes code.

I recently read somewhere about research suggesting that LLM chats should be "restarted" occasionally because they "get dumber" as history or context size grows. In vibe coding, this is really noticeable: over time it starts to "mess up" code more than help.

If at the beginning you make literally 1-2 fixes to the generated code to make everything work, now you have to rewrite 10-20% of LLM spaghetti each time.

At some point, the balance shifts from "I'm super productive, LLM helps me code" towards "why am I spending more time fixing its mistakes than writing code."

Coding with an LLM is literally coding with a junior :)

2. When a project grows beyond the "prototype" stage, it becomes easier to work with it "the old way" again.

On one hand, Cursor devs did a good job stuffing everything necessary into LLM context, including dependencies, neighboring modules, etc. If you want, you can add anything else there by referencing a file or entire folder through "@". So if it "doesn't know" something — that's a skill issue.

On the other hand, LLM context is still limited. And from the previous point, we know it's limited not so much technically (token limit is quite high in modern models) as by that floating point when the model starts magically "getting dumber."

So "painting with broad strokes" from a general chat works well on small projects (or microservices), but when they grow to at least production app level — you start spending more time cleaning code from garbage than if you had written it from scratch.

So in medium-large projects, instead of a big chat, I start using LLM editing directly within the file more often (that Cmd+K thing) and apply changes gradually, controlling each step.

Like "rewrite this function to take raw bytes instead of a file object as input" or "add a loading indicator to this react component". Well, basically standard programming with LLM, I think everyone has already switched to it.

But that's not vibe coding anymore.

3. We're all in for a ton of crappy vibe code in production.

Now vibe coding will become popular and we'll all drown in a pile of crappy prototypes and terrible quality commits on GitHub. I predict the term will acquire a super-negative connotation and vibe coders will be chased out of respectable places with piss-soaked rags.

So playing with prototypes at home is one thing, but pushing vibe code to prod is something else entirely. But like with any pop tool, it's inevitable.

If I do decide to finish this idea and make it into a production service — I'll rewrite everything from scratch. Not without AI help, of course, but under my complete control over frontend and backend.

And with paid access, of course! :3